Consider a world where metrics and dashboards do not exist, where your work is free from constraints and you have the freedom to explore your imagination, creativity, and innovative ideas without being tethered to anything.

It sounds like a utopia anyone would crave, right? But business owners and managers don't share that sentiment. They operate in a world where OKRs, KPIs, and accountability define performance, and in that environment, dreaming and fairy tales have no place.

Distributed teams are becoming more prevalent and the demand for rapid development is skyrocketing, so managers are looking for ways to maintain control. In development teams, many have turned to "DORA metrics" to do so. By tracking these metrics and trying to improve them, managers feel they have some degree of control over their engineering team's performance and culture.

But here's a message for all the managers out there, on behalf of developers: DORA metrics alone are insufficient and won't give you the help you need.

What are DORA metrics?

Before we look at why DORA metrics fall short today, let's understand what they are.

Accelerate, a widely used reference book for engineering leaders, introduced four metrics from the DevOps Research and Assessment (DORA) group, known as the DORA 4 metrics.

These metrics were developed to assist engineering teams in determining two things: A) The characteristics of a top-performing team, and B) How their performance compares to the rest of the industry.

The four key metrics are as follows:

Deployment Frequency:

This metric measures how often code is deployed to production or released to end users within a given time frame. Code review can factor into it as well, since changes are reviewed before they are integrated into the production environment.
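To make the definition concrete, here is a minimal Python sketch. It assumes you can export production deployment timestamps from your CI/CD tooling; the `deployment_frequency` helper and the sample data are hypothetical. It counts the deployments that fall inside a time window and divides by the window length in days.

```python
from datetime import datetime, timedelta


def deployment_frequency(deploy_times, window_start, window_end):
    """Average number of production deployments per day in [window_start, window_end)."""
    window_days = (window_end - window_start) / timedelta(days=1)
    if window_days <= 0:
        raise ValueError("window_end must be after window_start")
    # Only deployments inside the window count toward the rate.
    in_window = [t for t in deploy_times if window_start <= t < window_end]
    return len(in_window) / window_days


start = datetime(2024, 1, 1)
end = start + timedelta(days=7)
# Hypothetical export: two deployments a day for one week, plus one just outside the window.
deploys = [start + timedelta(days=d, hours=h) for d in range(7) for h in (10, 16)]
deploys.append(end + timedelta(hours=1))
print(deployment_frequency(deploys, start, end))  # 2.0
```

Whether you report the result per day, per week, or as a bucket such as "on demand" is a presentation choice; the underlying count is the same.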

Lead Time for Changes:

This metric measures the time between a commit being made and that commit making it to production. It helps in understanding the effectiveness of the development process once coding has been initiated.
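A minimal sketch of the calculation, assuming each change that reached production can be paired with its commit time and the time of the deployment that shipped it (the data and helper names are hypothetical). The median is used here because a few long-lived commits can skew a mean; that choice is an assumption, not part of the definition above.

```python
from datetime import datetime, timedelta
from statistics import median


def lead_times(changes):
    """Commit-to-production durations from (commit_time, deploy_time) pairs."""
    return [deployed - committed for committed, deployed in changes]


def median_lead_time(changes):
    """Median lead time for changes across all changes that reached production."""
    if not changes:
        raise ValueError("no changes reached production in this window")
    return median(lead_times(changes))


# Hypothetical commits and the deployments that shipped them.
t0 = datetime(2024, 1, 1, 9, 0)
changes = [
    (t0, t0 + timedelta(hours=1)),
    (t0, t0 + timedelta(hours=2)),
    (t0, t0 + timedelta(hours=30)),  # one slow change barely moves the median
]
print(median_lead_time(changes))  # 2:00:00
```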

Mean Time to Restore:

This metric is also known as mean time to recovery. It measures the average time it takes to restore service after an incident, i.e., a service outage or defect that impacts end users. To lower it, teams need better observability (monitoring and alerting) so that failures can be detected and resolved quickly.
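As a rough sketch, assuming each resolved incident is recorded as a (detected_at, restored_at) pair, MTTR is the mean of those durations. Measuring from detection rather than from the deployment that caused the problem is an assumption; teams define the start point differently, and the sample data here is hypothetical.

```python
from datetime import datetime, timedelta


def mean_time_to_restore(incidents):
    """Average of (restored_at - detected_at) over resolved incidents."""
    durations = [restored - detected for detected, restored in incidents]
    if not durations:
        raise ValueError("no resolved incidents in this window")
    # sum() needs a timedelta start value; its default start of 0 cannot be added to a timedelta.
    return sum(durations, timedelta()) / len(durations)


# Hypothetical incidents: one fixed in 30 minutes, one in 90 minutes.
t0 = datetime(2024, 3, 4, 14, 0)
incidents = [
    (t0, t0 + timedelta(minutes=30)),
    (t0, t0 + timedelta(minutes=90)),
]
print(mean_time_to_restore(incidents))  # 1:00:00
```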

Change Failure Rate:

Change failure rate measures the proportion of deployments to production that result in degraded service and typically need remediation, such as a rollback or hotfix. It should be kept as low as possible; a low rate signals thorough testing, effective debugging, and sound problem-solving practices.
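In its simplest form the calculation is a ratio. This sketch assumes every deployment record already carries a hypothetical `degraded_service` flag; deciding how that flag gets set is the hard part in practice.

```python
def change_failure_rate(deployments):
    """Fraction of production deployments flagged as having degraded service."""
    if not deployments:
        raise ValueError("no deployments in this window")
    failed = sum(1 for d in deployments if d["degraded_service"])
    return failed / len(deployments)


# Hypothetical month: 20 deployments, 3 of which degraded service.
deployments = [{"id": i, "degraded_service": i in (4, 11, 17)} for i in range(20)]
print(f"{change_failure_rate(deployments):.0%}")  # 15%
```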

In their words:

“Deployment Frequency and Lead Time for Changes measure velocity, while Change Failure Rate and Time to Restore Service measure stability. And by measuring these values, and continuously iterating to improve on them, a team can achieve significantly better business outcomes.”

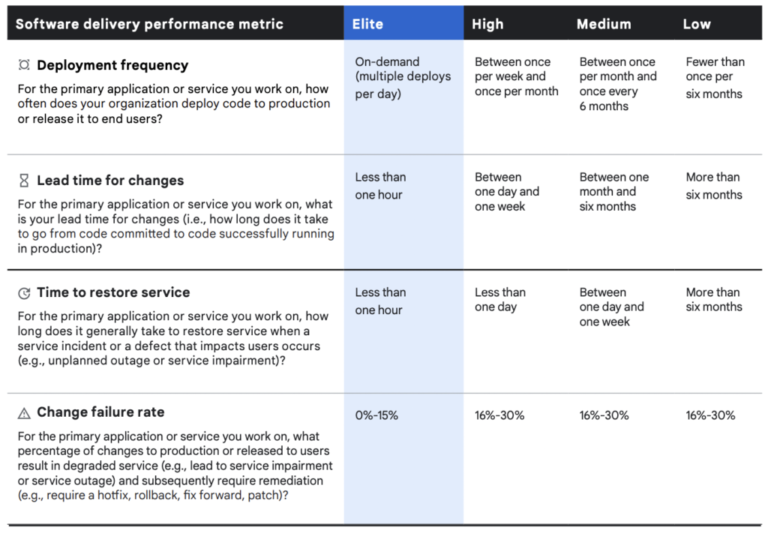

DORA groups teams into four performance categories across these four metrics:

- Elite performers

- High performers

- Medium performers

- Low performers


What are the challenges of DORA metrics?

It doesn't take into consideration all the factors that add to the success of the development process:

DORA metrics are a useful tool for tracking and comparing DevOps team performance. Unfortunately, they don't account for all the factors behind a successful software development process. For example, coding skills are hard to assess across teams with varying levels of expertise. These metrics also overlook the effort that happens behind the scenes, such as debugging and feature development.

It doesn't provide full context:

While DORA metrics tell us whether a value is high or low, they don't reveal why. Suppose lead time for changes increases: that could happen for many different reasons. For example, DORA metrics don't reflect how effective the feedback given during code review is, so the true impact and value of the review process is overlooked.

The software development landscape is constantly evolving:

The software development landscape is changing rapidly, and DORA metrics may not adapt quickly to emerging programming practices, coding standards, and other trends. For instance, code review has evolved beyond traditional peer review to include practices like automated code analysis. DORA metrics may not fully capture these new approaches, so they may not properly reflect how effective such reviews are.

It is not meant for every team:

DORA metrics are a great tool for analyzing DevOps performance, but that doesn't mean they are relevant to every development team. They work best for teams that deploy frequently and can iterate on changes quickly. For example, if your team must meet certain standards before every release, or ships software monthly, its deployment frequency will look low almost every time.

Why you may have been using DORA wrong

Relying solely on DORA metrics to evaluate software teams’ performance has limited value. Leaders must now move beyond these metrics, identify patterns, and obtain a comprehensive understanding of all factors that impact the software development life cycle (SDLC).

For example, if a team’s cycle time varies and exceeds three days, while all other metrics remain constant, managers must investigate deployment issues, the time it takes for pull requests to be approved, the review process, or a decrease in a developer’s productivity.
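One way to start the investigation described above is to split each pull request's cycle time into stages and see which stage dominates. This is a minimal sketch: the timestamp field names, stage names, and the three-day threshold are hypothetical and depend on what your Git host and CI/CD tool actually record.

```python
from datetime import datetime, timedelta

# Hypothetical stage boundaries: (stage name, start field, end field).
STAGES = [
    ("coding", "first_commit", "opened"),
    ("waiting_for_review", "opened", "first_review"),
    ("in_review", "first_review", "approved"),
    ("merging", "approved", "merged"),
    ("deploying", "merged", "deployed"),
]


def stage_durations(pr):
    """Split one PR's cycle time into consecutive stage durations."""
    return {name: pr[end] - pr[start] for name, start, end in STAGES}


def slowest_stage_if_over(pr, threshold=timedelta(days=3)):
    """Return the longest stage when total cycle time exceeds threshold, else None."""
    total = pr["deployed"] - pr["first_commit"]
    if total <= threshold:
        return None
    durations = stage_durations(pr)
    return max(durations, key=durations.get)


pr = {
    "first_commit": datetime(2024, 1, 1, 9),
    "opened": datetime(2024, 1, 1, 17),
    "first_review": datetime(2024, 1, 4, 17),
    "approved": datetime(2024, 1, 5, 10),
    "merged": datetime(2024, 1, 5, 11),
    "deployed": datetime(2024, 1, 5, 15),
}
print(slowest_stage_if_over(pr))  # waiting_for_review
```

The breakdown only points at where time went; why the PR sat unreviewed for three days is still a question for the team.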

If a developer is coding on fewer days than usual, what is the reason? Is it technical debt, frequent context switching, or some other factor that hasn't been identified yet? Leaders need to look beyond DORA metrics to understand the underlying reasons behind any deviations or trends in performance.

Combine DORA metrics with other engineering analytics:

For DORA to produce reliable results, the team must clearly understand which metrics they are using and why. DORA can produce comparable results for teams with similar deployment patterns, but the data should be used to improve the team's performance rather than treated as numbers to chase. Combining DORA with other engineering analytics, for example to identify bottlenecks and areas for improvement, gives a complete picture of the development process.

Use other indexes along with DORA metrics:

DORA data is also easy to misinterpret because teams define "failure" differently, which is a particular challenge for metrics like CFR and MTTR. Interpreting the results with inconsistent, team-specific definitions is often ineffective. Additionally, DORA metrics focus only on velocity and stability. They do not consider factors such as the quality of work, developer productivity, and the impact on end users. So it is important to use other indexes as well, for proactive responses, qualitative analysis of workflows, and SDLC predictability. Together, they give a 360-degree view of the team's workflow.
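To see how much the definition of "failure" matters, consider the same set of deployments judged by two teams. In this hypothetical sketch, one definition counts only rollbacks and the other also counts follow-up hotfixes and linked incidents; both definitions and the data are assumptions for illustration.

```python
from typing import Callable, NamedTuple


class Deployment(NamedTuple):
    rolled_back: bool      # the deployment was reverted
    hotfix_followed: bool  # a hotfix shipped shortly afterwards
    incident_opened: bool  # an incident was linked to this deployment


def change_failure_rate(deployments, is_failure: Callable[[Deployment], bool]) -> float:
    """CFR under a caller-supplied definition of what counts as a failed change."""
    if not deployments:
        raise ValueError("no deployments in this window")
    return sum(1 for d in deployments if is_failure(d)) / len(deployments)


def rollback_only(d: Deployment) -> bool:
    return d.rolled_back


def any_remediation(d: Deployment) -> bool:
    return d.rolled_back or d.hotfix_followed or d.incident_opened


# The same ten hypothetical deployments, judged under two definitions.
deployments = (
    [Deployment(True, False, False)]
    + [Deployment(False, True, False)] * 2
    + [Deployment(False, False, True)]
    + [Deployment(False, False, False)] * 6
)
print(change_failure_rate(deployments, rollback_only))    # 0.1
print(change_failure_rate(deployments, any_remediation))  # 0.4
```

Identical work yields a CFR four times higher under the broader definition, which is why comparing CFR across teams only makes sense once the definition is agreed on.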

Use it as a tool for continuous improvement and increase value delivery:

To achieve business goals, it is essential to correlate DORA data with other critical indicators like review time, code churn, maker time, PR size, and more. Using DORA in combination with more context, customization, and traceability can offer a true picture of the team’s performance and identify the steps needed to resolve bottlenecks and hidden fault lines at all levels. Ultimately, DORA should be used as a tool for continuous improvement, product management, and enhancing value delivery.

Combined with these indicators, DORA metrics can also offer insight into coding skills by revealing patterns in code quality, review effectiveness, and debugging cycles. This can help identify blind spots where additional training is required.

Conclusion

While DORA serves its purpose well, it is only the beginning of improving engineering excellence. Looking at numbers alone is not enough. Engineering managers should also focus on the practices and people behind the numbers, and on the barriers those people face in doing their best work. Engineering excellence is closely tied to a team's productivity and well-being, so it is crucial to consider every factor that affects a team's performance and take appropriate steps to address them.