Software product metrics measure quality, performance, and user satisfaction, aligning with business goals to improve your software. This article explains essential metrics and their role in guiding development decisions.

Software product metrics are quantifiable measurements that assess various characteristics and performance aspects of software products. These metrics are designed to align with business goals, add user value, and ensure the proper functioning of the product. Tracking them helps ensure your software meets quality standards, performs reliably, and fulfills user expectations. User satisfaction metrics include Net Promoter Score (NPS), Customer Satisfaction Score (CSAT), and Customer Effort Score (CES), which provide insight into user experiences and satisfaction levels. User engagement metrics include active users, session duration, and feature usage, which help teams understand how users interact with the product. Understanding these product metrics is essential for continuous improvement.

Evaluating quality, performance, and effectiveness, software metrics guide development decisions and align with user needs. They provide insights that influence development strategies, leading to enhanced product quality and improved developer experience and productivity. These metrics help teams identify areas for improvement, assess project progress, and make informed decisions to enhance product quality.

Quality software metrics reduce maintenance efforts, enabling teams to focus on developing new features and enhancing user satisfaction. Comprehensive insights into software health help teams detect issues early and guide improvements, ultimately leading to better software. These metrics serve as a compass, guiding your development team towards creating a robust and user-friendly product.

Software quality metrics are essential quantitative indicators that evaluate the quality, performance, maintainability, and complexity of software products. These quantifiable measures enable teams to monitor progress, identify challenges, and adjust strategies in the software development process. Metrics in software engineering also play a crucial role in improving the overall quality of the software product.

By measuring various aspects such as functionality, reliability, and usability, quality metrics ensure that software systems meet user expectations and performance standards. The following subsections delve into specific key metrics that play a pivotal role in maintaining high code quality and software reliability.

Defect density is a crucial metric that helps identify problematic areas in the codebase by measuring the number of defects per a specified amount of code. Typically measured in terms of Lines of Code (LOC), a high defect density indicates potential maintenance challenges and higher defect risks. Pinpointing areas with high defect density allows development teams to focus on improving those sections, leading to a more stable and reliable software product and enhancing defect removal efficiency.
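As a rough illustration, defect density can be computed as defects divided by code size, commonly normalized per thousand lines of code (KLOC). The sketch below is only an example: the function name `defect_density`, the module names, and the counts are hypothetical and not taken from any specific tool.

```python
def defect_density(defect_count: int, lines_of_code: int, per: int = 1000) -> float:
    """Return defects per `per` lines of code (per KLOC by default)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defect_count * per / lines_of_code


# Hypothetical sample data: (module name, defects found, lines of code).
modules = [("billing", 18, 6000), ("auth", 4, 8000), ("search", 9, 4500)]

# Rank modules so the most defect-dense code is looked at first.
ranked = sorted(modules, key=lambda m: defect_density(m[1], m[2]), reverse=True)
for name, defects, loc in ranked:
    print(f"{name}: {defect_density(defects, loc):.2f} defects/KLOC")
```

Normalizing by size matters here: a large module with many defects can still be healthier per line than a small module with only a few.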

Understanding and reducing defect density is essential for maintaining high code quality. It provides a clear picture of the software’s health and helps teams prioritize bug fixes. Consistent monitoring allows teams to proactively address issues, enhancing the overall quality and user satisfaction of the software product.

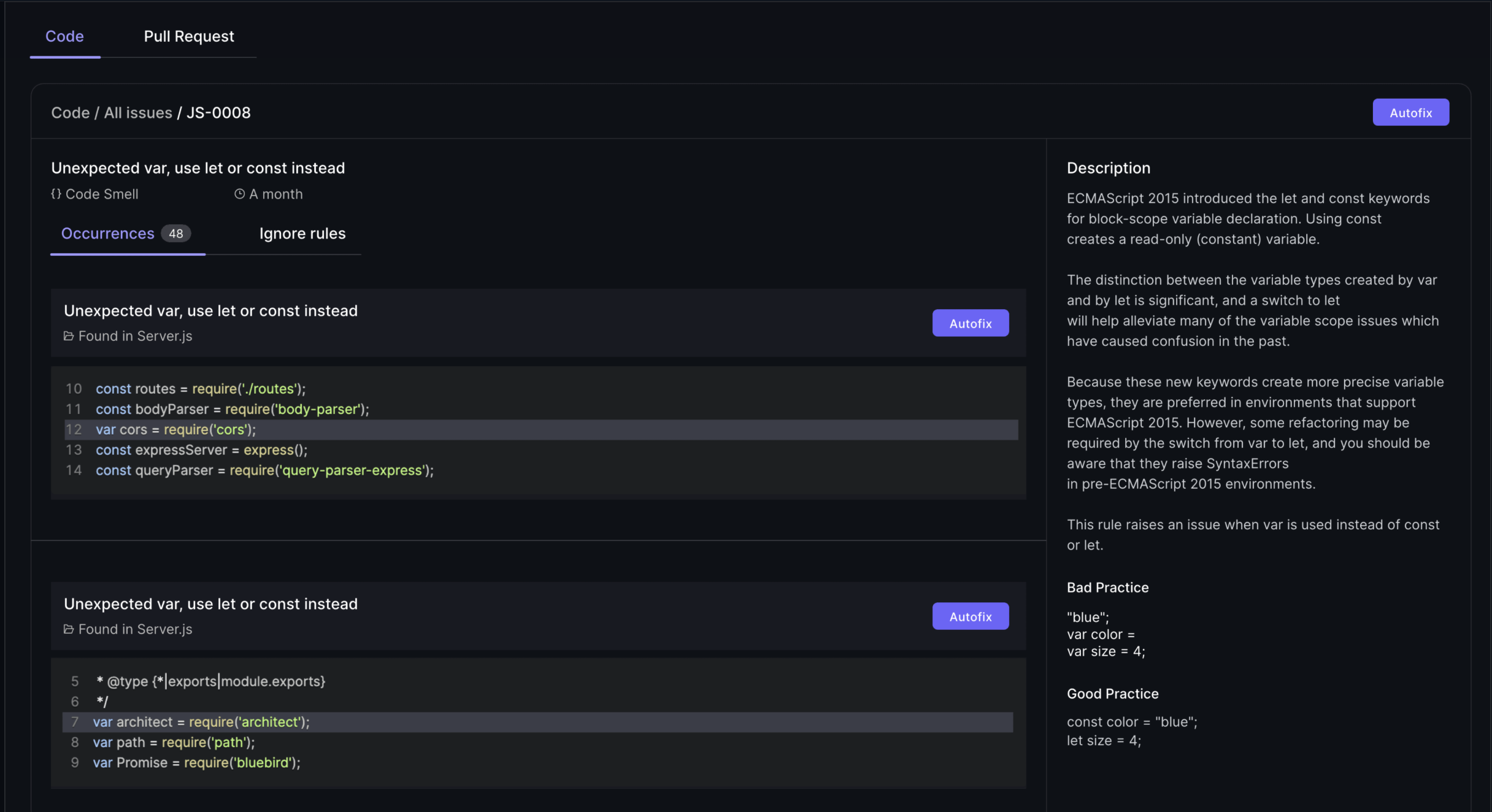

Code coverage is a metric that assesses the percentage of code executed during testing, ensuring adequate test coverage and identifying untested parts. Static analysis tools like SonarQube, ESLint, and Checkstyle play a crucial role in maintaining high code quality by enforcing consistent coding practices and detecting potential vulnerabilities before runtime. These tools are integral to the software development process, helping teams adhere to code quality standards and reduce the likelihood of defects.
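To make the percentage concrete, here is a minimal sketch of line coverage: the share of executable lines that were run at least once during testing. The line numbers are made up, and real coverage tools collect this data automatically; the function names are assumptions for illustration.

```python
def line_coverage(executed_lines: set, executable_lines: set) -> float:
    """Percentage of executable lines that were run at least once."""
    if not executable_lines:
        return 100.0  # nothing to cover
    covered = executed_lines & executable_lines
    return 100 * len(covered) / len(executable_lines)


def untested_lines(executed_lines: set, executable_lines: set) -> list:
    """Executable lines that no test reached, in ascending order."""
    return sorted(executable_lines - executed_lines)


# Hypothetical data for one file: lines that can run vs. lines the tests hit.
executable = {1, 2, 3, 4, 5, 6, 7, 8}
executed = {1, 2, 3, 5, 8}

print(f"coverage: {line_coverage(executed, executable):.1f}%")
print("untested lines:", untested_lines(executed, executable))
```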

Maintaining high code quality through comprehensive code coverage leads to fewer defects and improved code maintainability. Software quality management platforms that facilitate code coverage analysis include:

The Maintainability Index is a metric that provides insights into the software’s complexity, readability, and documentation, all of which influence how easily a software system can be modified or updated. Metrics such as cyclomatic complexity, which measures the number of linearly independent paths in code, are crucial for understanding the complexity of the software. High complexity typically suggests there may be maintenance challenges ahead. It also indicates a greater risk of defects.

Other metrics like the Length of Identifiers, which measures the average length of distinct identifiers in a program, and the Depth of Conditional Nesting, which measures the depth of nesting of if statements, also contribute to the Maintainability Index. These metrics help identify areas that may require refactoring or documentation improvements, ultimately enhancing the maintainability and longevity of the software product.
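Cyclomatic complexity can be approximated by counting decision points in the code and adding one. The sketch below uses Python's standard `ast` module to do that for Python source. It is a simplified approximation, not the exact algorithm of any particular analysis tool, and the function name and sample snippet are illustrative.

```python
import ast

# Node types treated as one extra decision point each (a simplifying assumption).
_DECISION_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def approx_cyclomatic_complexity(source: str) -> int:
    """Approximate McCabe complexity: 1 + number of decision points."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _DECISION_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds two extra branches.
            complexity += len(node.values) - 1
    return complexity


SAMPLE = """
def f(x):
    if x > 0 and x < 10:
        return 1
    for i in range(x):
        pass
    return 0
"""

print(approx_cyclomatic_complexity(SAMPLE))
```

For the sample, the `if`, the `and`, and the `for` each add one path to the base of one.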

Performance and reliability metrics are vital for understanding the software’s ability to perform under various conditions over time. These metrics provide insights into the software’s stability, helping teams gauge how well the software maintains its operational functions without interruption. By implementing rigorous software testing and code review practices, teams can proactively identify and fix defects, thereby improving the software’s performance and reliability.

The following subsections explore specific essential metrics that are critical for assessing performance and reliability, including key performance indicators and test metrics.

Mean Time Between Failures (MTBF) is a key metric used to assess the reliability and stability of a system. It calculates the average time between failures, providing a clear indication of how often the system can be expected to fail. A higher MTBF indicates a more reliable system, as it means that failures occur less frequently.
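In its simplest form, MTBF is total operating time divided by the number of failures in that period. The following sketch assumes uptime is already tracked in hours; the function name and figures are hypothetical.

```python
import math


def mtbf_hours(total_operating_hours: float, failure_count: int) -> float:
    """Average operating time between failures, in hours."""
    if failure_count == 0:
        return math.inf  # no failures observed in the period
    return total_operating_hours / failure_count


# Hypothetical month: 720 hours of operation with 3 failures.
print(mtbf_hours(720, 3))
```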

Tracking MTBF helps teams understand the robustness of their software and identify potential areas for improvement. Analyzing this metric helps development teams implement strategies to enhance system reliability, ensuring consistent performance and meeting user expectations.

Mean Time to Repair (MTTR) reflects the average duration needed to resolve issues after system failures occur. This metric encompasses the total duration from system failure to restoration, including repair and testing times. A lower MTTR indicates that the system can be restored quickly, minimizing downtime and its impact on users. The abbreviation is also read as Mean Time to Recovery, a closely related measure of how efficiently services can be restored after a failure.
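As a minimal sketch, MTTR can be computed by averaging the time from failure to restoration across incidents. The incident timestamps below are invented for illustration, and the function name is an assumption.

```python
from datetime import datetime, timedelta


def mean_time_to_repair(incidents):
    """Average of (restored - failed) over a list of (failed, restored) pairs."""
    if not incidents:
        raise ValueError("at least one incident is required")
    total = sum((restored - failed for failed, restored in incidents), timedelta())
    return total / len(incidents)


# Hypothetical incidents: (time of failure, time service was restored).
incidents = [
    (datetime(2024, 3, 1, 9, 0), datetime(2024, 3, 1, 9, 30)),     # 30 minutes
    (datetime(2024, 3, 12, 14, 0), datetime(2024, 3, 12, 15, 30)),  # 90 minutes
]

print(mean_time_to_repair(incidents))
```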

Understanding MTTR is crucial for evaluating the effectiveness of maintenance processes. It provides insights into how efficiently a development team can address and resolve issues, ultimately contributing to the overall reliability and user satisfaction of the software product.

Response time measures the duration taken by a system to react to user commands, which is crucial for user experience. A shorter response time indicates a more responsive system, enhancing user satisfaction and engagement. Measuring response time helps teams identify performance bottlenecks that may negatively affect user experience.
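An average can hide slow outliers, so response times are often summarized with percentiles as well. The sketch below uses a simple nearest-rank percentile; the sample latencies are made up, and monitoring tools may use a different percentile method.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the value at rank ceil(pct% of n) in sorted order."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical response times in milliseconds, including one slow outlier.
response_times_ms = [120, 95, 110, 300, 105, 98, 102, 2500, 115, 101]

p50 = percentile(response_times_ms, 50)
p95 = percentile(response_times_ms, 95)
mean = sum(response_times_ms) / len(response_times_ms)
print(f"p50={p50} ms, p95={p95} ms, mean={mean:.1f} ms")
```

In this sample the median stays low while the 95th percentile exposes the slow request, which is the kind of bottleneck the mean alone would blur.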

Ensuring a quick response time is essential for maintaining high user satisfaction and retention rates. Performance monitoring tools can provide detailed insights into response times, helping teams optimize their software to deliver a seamless and efficient user experience.

User engagement and satisfaction metrics are vital for assessing how users interact with a product and can significantly influence its success. These metrics provide critical insights into user behavior, preferences, and satisfaction levels, helping teams refine product features to enhance user engagement.

Tracking these metrics helps development teams identify areas for improvement and ensures the software meets user expectations. The following subsections explore specific metrics that are crucial for understanding user engagement and satisfaction.

Net Promoter Score (NPS) is a widely used gauge of customer loyalty, reflecting how likely customers are to recommend a product to others. It is calculated by subtracting the percentage of detractors from the percentage of promoters, providing a clear metric for customer loyalty. A higher NPS indicates that customers are more satisfied and likely to promote the product.
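The calculation can be sketched as follows, assuming the conventional 0 to 10 survey scale where scores of 9 or 10 count as promoters and scores of 0 to 6 count as detractors. The survey responses are hypothetical.

```python
def net_promoter_score(scores):
    """NPS = % promoters (9-10) minus % detractors (0-6), on a -100..100 scale."""
    if not scores:
        raise ValueError("at least one survey response is required")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical survey responses on a 0-10 scale.
responses = [10, 9, 9, 8, 7, 6, 3, 10, 9, 5]
print(net_promoter_score(responses))
```

Passives (scores of 7 or 8) count toward the total number of responses but not toward either group.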

Tracking NPS helps teams understand customer satisfaction levels and identify areas for improvement. Focusing on increasing NPS helps development teams enhance user satisfaction and retention, leading to a more successful product.

The number of active users reflects the software’s ability to retain user interest and engagement over time. Tracking daily, weekly, and monthly active users helps gauge the ongoing interest and engagement levels with the software. A higher number of active users indicates that the software is effectively meeting user needs and expectations.
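Daily, weekly, and monthly active users can all be derived from the same activity log by counting distinct users in a trailing window. This is a minimal sketch: the event format, user IDs, and window lengths (1, 7, and 30 days) are assumptions for illustration.

```python
from datetime import date, timedelta


def active_users(events, end_day, window_days):
    """Distinct users with at least one event in the trailing window ending on end_day."""
    start = end_day - timedelta(days=window_days - 1)
    return {user for user, day in events if start <= day <= end_day}


# Hypothetical activity log: (user id, day of activity).
events = [
    ("a", date(2024, 3, 1)),
    ("b", date(2024, 3, 15)),
    ("a", date(2024, 3, 30)),
    ("c", date(2024, 3, 30)),
    ("d", date(2024, 2, 1)),
]

today = date(2024, 3, 30)
dau = len(active_users(events, today, 1))
wau = len(active_users(events, today, 7))
mau = len(active_users(events, today, 30))
print(dau, wau, mau)
```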

Understanding and tracking active users is crucial for improving user retention strategies. Analyzing user engagement data helps teams enhance software features and ensure the product continues to deliver value.

Tracking how frequently specific features are utilized can inform development priorities based on user needs and feedback. Analyzing feature usage reveals which features are most valued and frequently utilized by users, guiding targeted enhancements and prioritization of development resources.
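One simple way to quantify feature usage is an adoption rate: the share of active users who used each feature at least once. The sketch below assumes a hypothetical event log of (user, feature) pairs.

```python
from collections import defaultdict


def feature_adoption(events, total_active_users):
    """Fraction of active users who used each feature at least once."""
    if total_active_users <= 0:
        raise ValueError("total_active_users must be positive")
    users_by_feature = defaultdict(set)
    for user, feature in events:
        users_by_feature[feature].add(user)  # repeat use by one user counts once
    return {f: len(users) / total_active_users for f, users in users_by_feature.items()}


# Hypothetical usage log: (user id, feature name).
usage = [
    ("a", "export"), ("b", "export"), ("a", "export"),
    ("c", "search"), ("a", "search"), ("b", "search"), ("d", "search"),
]

print(feature_adoption(usage, total_active_users=4))
```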

Monitoring specific feature usage helps development teams gain insights into user preferences and behavior. This information helps identify areas for improvement and ensures that the software evolves in line with user expectations and demands.

Financial metrics are essential for understanding the economic impact of software products and guiding business decisions effectively. These metrics help organizations evaluate the economic benefits and viability of their software products. Tracking financial metrics helps development teams make informed decisions that contribute to the financial health and sustainability of the software product. Tracking metrics such as MRR helps Agile teams understand their product's financial health and growth trajectory.

The following subsections explore specific financial metrics that are crucial for evaluating software development.

Customer Acquisition Cost (CAC) represents the total cost of acquiring a new customer, including marketing expenses and sales team salaries. It is calculated by dividing total sales and marketing costs by the number of new customers acquired. A high CAC suggests that more targeted marketing strategies are necessary. It may also indicate that the product’s value proposition needs to be strengthened.
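The formula described above can be sketched in a few lines. The spend and customer figures are hypothetical.

```python
def customer_acquisition_cost(sales_and_marketing_cost: float, new_customers: int) -> float:
    """Total sales and marketing spend divided by new customers acquired."""
    if new_customers <= 0:
        raise ValueError("new_customers must be positive")
    return sales_and_marketing_cost / new_customers


# Hypothetical quarter: 50,000 spent to acquire 125 new customers.
print(customer_acquisition_cost(50_000, 125))
```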

Understanding CAC is crucial for optimizing marketing efforts and ensuring that the cost of acquiring new customers is sustainable. Reducing CAC helps organizations improve overall profitability and ensure the long-term success of their software products.

Customer lifetime value (CLV) quantifies the total revenue generated from a customer over the entire duration of their relationship with the product. It is calculated by multiplying the average purchase value by the purchase frequency and the customer lifespan. A healthy ratio of CLV to CAC indicates long-term value and sustainable revenue.
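A minimal sketch of this calculation, together with the CLV to CAC ratio, is shown below. The units (purchases per year, lifespan in years) and all figures are assumptions chosen for illustration.

```python
def customer_lifetime_value(avg_purchase_value: float,
                            purchases_per_year: float,
                            lifespan_years: float) -> float:
    """Average purchase value x purchase frequency x customer lifespan."""
    return avg_purchase_value * purchases_per_year * lifespan_years


def clv_to_cac_ratio(clv: float, cac: float) -> float:
    """How many times over a customer repays their acquisition cost."""
    if cac <= 0:
        raise ValueError("cac must be positive")
    return clv / cac


# Hypothetical customer: 50 per purchase, 12 purchases a year, 3-year lifespan.
clv = customer_lifetime_value(50.0, 12, 3)
print(clv, clv_to_cac_ratio(clv, cac=400.0))
```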

Tracking CLV helps organizations assess the long-term value of customer relationships and make informed business decisions. Focusing on increasing CLV helps development teams enhance customer satisfaction and retention, contributing to the financial health of the software product.

Monthly recurring revenue (MRR) is predictable revenue from subscription services generated monthly. It is calculated by multiplying the total number of paying customers by the average revenue per customer. MRR serves as a key indicator of financial health, representing consistent monthly revenue from subscription-based services.
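As a quick sketch of that formula, with hypothetical subscriber counts and pricing:

```python
def monthly_recurring_revenue(paying_customers: int, avg_revenue_per_customer: float) -> float:
    """Paying customers multiplied by average monthly revenue per customer."""
    return paying_customers * avg_revenue_per_customer


def mrr_growth_rate(previous_mrr: float, current_mrr: float) -> float:
    """Month-over-month change as a fraction of the previous month's MRR."""
    if previous_mrr <= 0:
        raise ValueError("previous_mrr must be positive")
    return (current_mrr - previous_mrr) / previous_mrr


# Hypothetical months: 200 then 220 customers at an average of 49.0 per month.
last_month = monthly_recurring_revenue(200, 49.0)
this_month = monthly_recurring_revenue(220, 49.0)
print(last_month, this_month, mrr_growth_rate(last_month, this_month))
```

The growth helper is an addition for illustration; it makes the "steady or increasing" trend easier to watch month over month.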

Tracking MRR allows businesses to forecast growth and make informed financial decisions. A steady or increasing MRR indicates a healthy subscription-based business, while fluctuations may signal the need for adjustments in pricing or service offerings.

Selecting the right metrics for your project is crucial for ensuring that you focus on the most relevant aspects of your software development process. A systematic approach helps identify the most appropriate product metrics that can guide your development strategies and improve the overall quality of your software. Activation rate tracks the percentage of users who complete a specific set of actions consistent with experiencing a product's core value, making it a valuable metric for understanding user engagement.
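Activation rate, as described above, can be sketched as the share of new users who completed every action in a chosen set. The action names and the definition of "activated" below are hypothetical; each product has to decide which actions represent its core value.

```python
def activation_rate(actions_by_user, required_actions):
    """Fraction of users who completed all required actions."""
    if not actions_by_user:
        return 0.0
    required = set(required_actions)
    activated = sum(1 for actions in actions_by_user.values() if required <= set(actions))
    return activated / len(actions_by_user)


# Hypothetical new users and the actions each one completed.
new_users = {
    "a": ["signup", "create_project", "invite_teammate"],
    "b": ["signup"],
    "c": ["create_project", "invite_teammate", "export"],
    "d": [],
}

print(activation_rate(new_users, {"create_project", "invite_teammate"}))
```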

The following subsections provide insights into key considerations for choosing the right metrics.

Metrics selected should directly support the overarching goals of the business to ensure actionable insights. By aligning metrics with business objectives, teams can make informed decisions that drive business growth and improve customer satisfaction. For example, if your business aims to enhance user engagement, tracking metrics like active users and feature usage will provide valuable insights.

A data-driven approach ensures that the metrics you track provide objective data that can guide your marketing strategy, product development, and overall business operations. Product managers play a crucial role in selecting metrics that align with business goals, ensuring that the development team stays focused on delivering value to users and stakeholders.

Clear differentiation between vanity metrics and actionable metrics is essential for effective decision-making. Vanity metrics may look impressive but do not provide insights or drive improvements. In contrast, actionable metrics inform decisions and strategies to enhance software quality. Avoid vanity metrics and focus instead on actionable metrics tied to business outcomes to ensure meaningful progress and alignment with organizational goals.

Using the right metrics fosters a culture of accountability and continuous improvement within agile teams. By focusing on actionable metrics, development teams can track progress, identify areas for improvement, and implement changes that lead to better software products. This focus is crucial for ensuring that metrics drive real value.

As a product develops, the focus should shift to metrics that reflect user engagement and retention. Early in the product lifecycle, metrics like user acquisition and activation rates are crucial for understanding initial user interest and onboarding success.

As the product matures, metrics related to user satisfaction, feature usage, and retention become more critical. Metrics should evolve to reflect the changing priorities and challenges at each stage of the product lifecycle.

Continuous tracking and adjustment of metrics ensure that development teams remain focused on the most relevant aspects of the project, leading to sustained product success.

Having the right tools for tracking and visualizing metrics is essential for automatically collecting raw data and providing real-time insights. These tools act as diagnostics for maintaining system performance and making informed decisions.

The following subsections explore tools that can help track and visualize software and process metrics effectively.

Static analysis tools analyze code without executing it, allowing developers to identify potential bugs and vulnerabilities early in the development process. These tools help improve code quality and maintainability by providing insights into code structure, potential errors, and security vulnerabilities. Popular static analysis tools include Typo, SonarQube, which provides comprehensive code metrics, and ESLint, which detects problematic patterns in JavaScript code.

Using static analysis tools helps development teams enforce consistent coding practices and detect issues early, ensuring high code quality and reducing the likelihood of software failures.

Dynamic analysis tools execute code to find runtime errors, significantly improving software quality. Examples of dynamic analysis tools include Valgrind and Google AddressSanitizer. These tools help identify issues that may not be apparent in static analysis, such as memory leaks, buffer overflows, and other runtime errors.

Incorporating dynamic analysis tools into the software development process helps ensure reliable software performance in real-world conditions, enhancing user satisfaction and reducing the risk of defects.

Performance monitoring tools track performance, availability, and resource usage. Examples include:

Insights from performance monitoring tools help identify performance bottlenecks and ensure adherence to SLAs. By using these tools, development teams can optimize system performance, maintain high user engagement, and ensure the software meets user expectations.

AI coding assistants do accelerate code creation, but they also introduce variability in style, complexity, and maintainability. The bottleneck has shifted from writing code to understanding, reviewing, and validating it.

Effective AI-era code reviews require three things:

AI coding reviews are not “faster reviews.” They are smarter, risk-aligned reviews that help teams maintain quality without slowing down the flow of work.

Understanding and utilizing software product metrics is crucial for the success of any software development project. These metrics provide valuable insights into various aspects of the software, from code quality to user satisfaction. By tracking and analyzing these metrics, development teams can make informed decisions, enhance product quality, and ensure alignment with business objectives.

Incorporating the right metrics and using appropriate tools for tracking and visualization can significantly improve the software development process. By focusing on actionable metrics, aligning them with business goals, and evolving them throughout the product lifecycle, teams can create robust, user-friendly, and financially successful software products. Using tools to automatically collect data and create dashboards is essential for tracking and visualizing product metrics effectively, enabling real-time insights and informed decision-making. Embrace the power of software product metrics to drive continuous improvement and achieve long-term success.

Software product metrics are quantifiable measurements that evaluate the performance and characteristics of software products, aligning with business goals while adding value for users. They play a crucial role in ensuring the software functions effectively.

Defect density is crucial in software development as it highlights problematic areas within the code by quantifying defects per unit of code. This measurement enables teams to prioritize improvements, ultimately reducing maintenance challenges and mitigating defect risks.

Code coverage significantly enhances software quality by ensuring that a high percentage of the code is tested, which helps identify untested areas and reduces defects. This thorough testing ultimately leads to improved code maintainability and reliability.

Tracking active users is crucial as it measures ongoing interest and engagement, allowing you to refine user retention strategies effectively. This insight helps ensure the software remains relevant and valuable to its users. A low user retention rate might suggest a need to improve the onboarding experience or add new features.

AI coding reviews enhance the software development process by optimizing coding speed and maintaining high code quality, which reduces human error and streamlines workflows. This leads to improved efficiency and the ability to quickly identify and address bottlenecks.


Over the past two years, LLMs have moved from interesting experiments to everyday tools embedded deeply in the software development lifecycle. Developers use them to generate boilerplate, draft services, write tests, refactor code, explain logs, craft documentation, and debug tricky issues. These capabilities created a dramatic shift in how quickly individual contributors can produce code. Pull requests arrive faster. Cycle time shrinks. Story throughput rises. Teams that once struggled with backlog volume can now push changes at a pace that was previously unrealistic.

If you look only at traditional engineering dashboards, this appears to be a golden age of productivity. Nearly every surface metric suggests improvement. Yet many engineering leaders report a very different lived reality. Roadmaps are not accelerating at the pace the dashboards imply. Review queues feel heavier, not lighter. Senior engineers spend more time validating work rather than shaping the system. Incidents take longer to diagnose. And teams who felt energised by AI tools in the first few weeks begin reporting fatigue a few months later.

This mismatch is not anecdotal. It reflects a meaningful change in the nature of engineering work. Productivity did not get worse. It changed form. But most measurement models did not.

This blog unpacks what actually changed, why traditional metrics became misleading, and how engineering leaders can build a measurement approach that reflects the real dynamics of LLM-heavy development. It also explains how Typo provides the system-level signals leaders need to stay grounded as code generation accelerates and verification becomes the new bottleneck.

For most of software engineering history, productivity tracked reasonably well to how efficiently humans could move code from idea to production. Developers designed, wrote, tested, and reviewed code themselves. Their reasoning was embedded in the changes they made. Their choices were visible in commit messages and comments. Their architectural decisions were anchored in shared team context.

When developers wrote the majority of the code, it made sense to measure activity:

how quickly tasks moved through the pipeline, how many PRs shipped, how often deployments occurred, and how frequently defects surfaced. The work was deterministic, so the metrics describing that work were stable and fairly reliable.

This changed the moment LLMs began contributing even 30 to 40 percent of the average diff.

Now the output reflects a mixture of human intent and model-generated patterns.

Developers produce code much faster than they can fully validate.

Reasoning behind a change does not always originate from the person who submits the PR.

Architectural coherence emerges only if the prompts used to generate code happen to align with the team’s collective philosophy.

And complexity, duplication, and inconsistency accumulate in places that teams do not immediately see.

This shift does not mean that AI harms productivity. It means the system changed in ways the old metrics do not capture. The faster the code is generated, the more critical it becomes to understand the cost of verification, the quality of generated logic, and the long-term stability of the codebase.

Productivity is no longer about creation speed.

It is about how all contributors, human and model, shape the system together.

To build an accurate measurement model, leaders need a grounded understanding of how LLMs behave inside real engineering workflows. These patterns are consistent across orgs that adopt AI deeply.

Two developers can use the same prompt but receive different structural patterns depending on model version, context window, or subtle phrasing. This introduces divergence in style, naming, and architecture.

Over time, these small inconsistencies accumulate and make the codebase harder to reason about.

This decreases onboarding speed and lengthens incident recovery.

Human-written code usually reflects a developer’s mental model.

AI-generated code reflects a statistical pattern.

It does not come with reasoning, context, or justification.

Reviewers are forced to infer why a particular logic path was chosen or why certain tradeoffs were made. This increases the cognitive load of every review.

When unsure, LLMs tend to hedge with extra validations, helper functions, or prematurely abstracted patterns. These choices look harmless in isolation because they show up as small diffs, but across many PRs they increase the complexity of the system. That complexity becomes visible during incident investigations, cross-service reasoning, or major refactoring efforts.

LLMs replicate logic instead of factoring it out.

They do not understand the true boundaries of a system, so they create near-duplicate code across files. Duplication multiplies maintenance cost and increases the amount of rework required later in the quarter.

Developers often use one model to generate code, another to refactor it, and yet another to write tests. Each agent draws from different training patterns and assumptions. The resulting PR may look cohesive but contain subtle inconsistencies in edge cases or error handling.

These behaviours are not failures. They are predictable outcomes of probabilistic models interacting with complex systems.

The question for leaders is not whether these behaviours exist.

It is how to measure and manage them.

Traditional metrics focus on throughput and activity.

Modern metrics must capture the deeper layers of the work.

Below are the three surfaces engineering leaders must instrument.

A PR with a high ratio of AI-generated changes carries different risks than a heavily human-authored PR.

Leaders need to evaluate:

This surface determines long-term engineering cost.

Ignoring it leads to silent drift.

Developers now spend more time verifying and less time authoring.

This shift is subtle but significant.

Verification includes:

This work does not appear in cycle time.

But it deeply affects morale, reviewer health, and delivery predictability.

A team can appear fast but become unstable under the hood.

Stability shows up in:

Stability is the real indicator of productivity in the AI era.

Stable teams ship predictably and learn quickly.

Unstable teams slip quietly, even when dashboards look good.

Below are the signals that reflect how modern teams truly work.

Understanding what portion of the diff was generated by AI reveals how much verification work is required and how likely rework becomes.

Measuring complexity on entire repositories hides important signals.

Measuring complexity specifically on changed files shows the direct impact of each PR.

Duplication increases future costs and is a common pattern in AI-generated diffs.

This includes time spent reading generated logic, clarifying assumptions, and rewriting partial work.

It is the dominant cost in LLM-heavy workflows.

If AI-origin code must be rewritten within two or three weeks, teams are gaining speed but losing quality.
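One way to watch for this is a rework rate: the share of AI-origin changes that were rewritten within a fixed window after merge. The sketch below assumes a 21-day window and a hypothetical record of (merge date, rewrite date) pairs; how a change is labeled AI-origin or detected as rewritten is outside its scope.

```python
from datetime import date, timedelta


def rework_rate(changes, window_days=21):
    """Fraction of changes rewritten within window_days of being merged."""
    if not changes:
        return 0.0
    window = timedelta(days=window_days)
    reworked = sum(
        1 for merged, rewritten in changes
        if rewritten is not None and rewritten - merged <= window
    )
    return reworked / len(changes)


# Hypothetical AI-origin changes: (merged on, rewritten on or None).
ai_changes = [
    (date(2024, 3, 1), date(2024, 3, 10)),  # rewritten after 9 days
    (date(2024, 3, 1), None),               # never rewritten
    (date(2024, 3, 5), date(2024, 4, 20)),  # rewritten after 46 days
    (date(2024, 3, 8), date(2024, 3, 29)),  # rewritten after 21 days
]

print(rework_rate(ai_changes))
```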

Noise reflects interruptions, irrelevant suggestions, and friction during review.

It strongly correlates with burnout and delays.

A widening cycle time tail signals instability even when median metrics improve.
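A simple way to see this effect is to compare the median with a high percentile of PR cycle time across two periods. The sketch below uses a nearest-rank percentile and a p90/p50 ratio as the tail indicator; both the ratio and the sample cycle times are illustrative assumptions.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def tail_ratio(cycle_times):
    """p90 divided by p50; a larger value means a longer tail."""
    return percentile(cycle_times, 90) / percentile(cycle_times, 50)


# Hypothetical PR cycle times in hours, before and after heavy AI adoption.
before = [10, 12, 14, 16, 18, 20, 22, 24, 26, 30]
after = [6, 7, 8, 9, 10, 11, 12, 13, 60, 90]

print("median:", percentile(before, 50), "->", percentile(after, 50))
print("tail ratio:", round(tail_ratio(before), 2), "->", round(tail_ratio(after), 2))
```

In this sample the median improves while the tail ratio grows, which is the pattern a median-only dashboard would miss.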

These metrics create a reliable picture of productivity in a world where humans and AI co-create software.

Companies adopting LLMs see similar patterns across teams and product lines.

Speed of creation increases.

Speed of validation does not.

This imbalance pulls senior engineers into verification loops and slows architectural decisions.

They carry the responsibility of reviewing AI-generated diffs and preventing architectural drift.

The load is significant and often invisible in dashboards.

Small discrepancies from model-generated patterns compound.

Teams begin raising concerns about inconsistent structure, uneven abstractions, or unclear boundary lines.

Models can generate correct syntax with incorrect logic.

Without clear reasoning, mistakes slip through more easily.

Surface metrics show improvement, but deeper signals reveal instability and hidden friction.

These patterns highlight why leaders need a richer understanding of productivity.

Instrumentation must evolve to reflect how code is produced and validated today.

Measure AI-origin ratio, complexity changes, duplication, review delays, merge delays, and rework loops.

This is the earliest layer where drift appears.

A brief explanation restores lost context and improves future debugging speed.

This is especially helpful during incidents.

Track how prompt iterations, model versions, and output variability influence code quality and workflow stability.

Sentiment combined with workflow signals shows where AI improves flow and where it introduces friction.

Reviewers, not contributors, now determine the pace of delivery.

Instrumentation that reflects these realities helps leaders manage the system, not the symptoms.

This shift is calm, intentional, and grounded in real practice.

Fast code generation does not create fast teams unless the system stays coherent.

Its behaviour changes with small variations in context, prompts, or model updates.

Leadership must plan for this variability.

Correctness can be fixed later.

Accumulating complexity cannot.

Developer performance cannot be inferred from PR counts or cycle time when AI produces much of the diff.

Complexity and duplication should be watched continuously.

They compound silently.

Teams that embrace this mindset avoid long-tail instability.

Teams that ignore it accumulate technical and organisational debt.

Below is a lightweight, realistic approach.

This helps reviewers understand where deeper verification is needed.

This restores lost context that AI cannot provide.

This reduces future rework and stabilises the system over time.

Verification is unevenly distributed.

Managing this improves delivery pace and morale.

These cycles remove duplicated code, reduce complexity, and restore architectural alignment.

New team members need to understand how AI-generated code behaves, not just how the system works.

Version, audit, and consolidate prompts to maintain consistent patterns.

This framework supports sustainable delivery at scale.

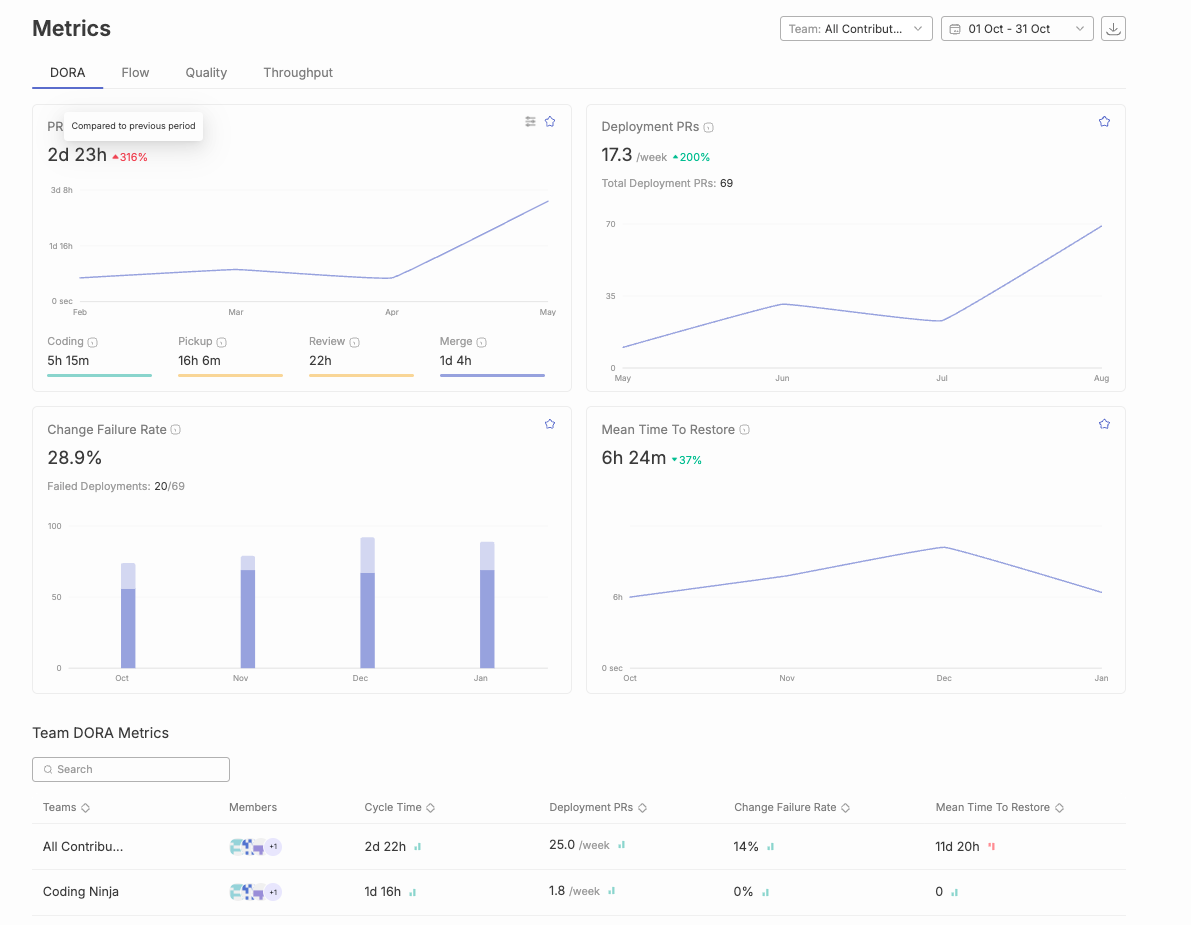

Typo provides visibility into the signals that matter most in an LLM-heavy engineering organisation.

It focuses on system-level health, not individual scoring.

Typo identifies which parts of each PR were generated by AI and tracks how these sections relate to rework, defects, and review effort.

Typo highlights irrelevant or low-value suggestions and interactions, helping leaders reduce cognitive overhead.

Typo measures complexity and duplication at the file level, giving leaders early insight into architectural drift.

Typo surfaces rework loops, shifts in cycle time distribution, reviewer bottlenecks, and slowdowns caused by verification overhead.

Typo correlates developer sentiment with workflow data, helping leaders understand where friction originates and how to address it.

These capabilities help leaders measure what truly affects productivity in 2026 rather than relying on outdated metrics designed for a different era.

LLMs have transformed engineering work, but they have also created new challenges that teams cannot address with traditional metrics. Developers now play the role of validators and maintainers of probabilistic code. Reviewers spend more time reconstructing reasoning than evaluating syntax. Architectural drift accelerates. Teams generate more output yet experience more friction in converting that output into predictable delivery.

To understand productivity honestly, leaders must look beyond surface metrics and instrument the deeper drivers of system behaviour. This means tracking AI-origin code health, understanding verification load, and monitoring long-term stability.

Teams that adopt these measures early will gain clarity, predictability, and sustainable velocity.

Teams that do not will appear productive in dashboards while drifting into slow, compounding drag.

In the LLM era, productivity is no longer defined by how fast code is written.

It is defined by how well you control the system that produces it.


By 2026, AI is no longer an enhancement to engineering workflows—it is the architecture beneath them. Agentic systems write code, triage issues, review pull requests, orchestrate deployments, and reason about changes. But tools alone cannot make an organization AI-first. The decisive factor is culture: shared understanding, clear governance, transparent workflows, AI literacy, ethical guardrails, experimentation habits, and mechanisms that close AI information asymmetry across roles.

This blog outlines how engineering organizations can cultivate a true AI-first culture through:

A mature AI-first culture is one where humans and AI collaborate transparently, responsibly, and measurably—aligning engineering speed with safety, stability, and long-term trust.

AI is moving from a category of tools to a foundational layer of how engineering teams think, collaborate, and build. This shift forces organizations to redefine how engineering work is understood and how decisions are made. The teams that succeed are those that cultivate culture—not just tooling.

An AI-first engineering culture is one where AI is not viewed as magic, mystery, or risk, but as a predictable, observable component of the software development lifecycle. That requires dismantling AI information asymmetry, aligning teams on literacy and expectations, and creating workflows where both humans and agents can operate with clarity and accountability.

AI information asymmetry emerges when only a small group—usually data scientists or ML engineers—understands model behavior, data dependencies, failure modes, and constraints. Meanwhile, the rest of the engineering org interacts with AI outputs without understanding how they were produced.

This creates several organizational issues:

Teams defer to AI specialists, leading to bottlenecks, slower decisions, and internal dependency silos.

Teams don’t know how to challenge AI outcomes or escalate concerns.

Stakeholders expect deterministic outputs from inherently probabilistic systems.

Engineers hesitate to innovate with AI because they feel under-informed.

A mature AI-first culture actively reduces this asymmetry through education, transparency, and shared operational models.

Agentic systems fundamentally reshape the engineering process. Unlike earlier LLMs that responded to prompts, agentic AI can:

This changes the nature of engineering work from “write code” to:

Engineering teams must upgrade their culture, skills, and processes around this agentic reality.

Introducing AI into engineering is not a tooling change—it is an organizational transformation touching behavior, identity, responsibility, and mindset.

Teams must adopt continuous learning to avoid falling behind.

Bias, hallucinations, unsafe generations, and data misuse require shared governance.

PMs, engineers, designers, QA—all interact with AI in their workflows.

Requirements shift from tasks to “goals” that agents translate.

Data pipelines become just as important as code pipelines.

Culture must evolve to embrace these dynamics.

An AI-first culture is defined not by the number of models deployed but by how AI thinking permeates each stage of engineering.

Everyone—from backend engineers to product managers—understands basics like:

This removes dependency silos.

Teams continuously run safe pilots that:

Experimentation becomes an organizational muscle.

Every AI-assisted decision must be explainable.

Every agent action must be logged.

Every output must be attributable to data and reasoning.
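A minimal sketch of what such logging could look like follows. The record fields, the `AgentActionRecord` and `log_action` names, and the in-memory list used as a sink are all hypothetical choices for illustration; a real system would write to durable, access-controlled storage.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class AgentActionRecord:
    agent: str       # which agent acted (hypothetical identifier)
    action: str      # what it did
    inputs: list     # data the decision was based on
    rationale: str   # the reasoning the agent reported
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def log_action(record, sink):
    """Append one JSON line per action; reject records that are not attributable."""
    if not record.inputs or not record.rationale:
        raise ValueError("action must carry its inputs and rationale")
    line = json.dumps(asdict(record), sort_keys=True)
    sink.append(line)
    return line


audit_log = []  # stand-in for an append-only store
log_action(
    AgentActionRecord(
        agent="review-bot",
        action="approve_pr",
        inputs=["diff:example"],
        rationale="all checks passed; no risky paths touched",
    ),
    audit_log,
)
```

Rejecting records without inputs or a rationale at write time is one way to make attribution a hard requirement rather than a convention.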

Teams must feel safe to:

This prevents blind trust and silent failures.

AI shortens cycle time.

Teams must shorten review cycles, experimentation cycles, and feedback cycles.

AI usage becomes predictable and funded:

Systems running multiple agents coordinating tasks require new review patterns and observability.

Review queues spike unless designed intentionally.

Teams must define risk levels, oversight rules, documentation standards, and rollback guardrails.

AI friction, prompt fatigue, cognitive overload, and unclear mental models become major blockers to adoption.

Teams redefine what it means to be an engineer: more reasoning, less boilerplate.

AI experts hoard expertise due to unclear processes.

Agents generate inconsistent abstractions over time.

More PRs → more diffs → more burden on senior engineers.

Teams blindly trust outputs without verifying assumptions.

Developers lose deep problem-solving skills if not supported by balanced work.

Teams use unapproved agents or datasets due to slow governance.

Culture must address these intentionally.

Teams must be rebalanced toward supervision, validation, and design.

Rules for:

Versioned, governed, documented, and tested.

Every AI interaction must be measurable.

Monthly rituals:

Blind trust is failure mode #1.

Typo is the engineering intelligence layer that gives leaders visibility into whether their teams are truly ready for AI-first development—not merely using AI tools, but culturally aligned with them.

Typo helps leaders understand:

Typo identifies:

Leaders get visibility into real adoption—not assumptions.

Typo detects:

This gives leaders clarity on when AI helps—and when it slows the system.

Typo’s continuous pulse surveys measure:

These insights reveal whether culture is evolving healthily or becoming resistant.

Typo helps leaders:

Governance becomes measurable, not manual.

AI-first engineering culture is built—not bought.

It emerges through intentional habits: lowering information asymmetry, sharing literacy, rewarding experimentation, enforcing ethical guardrails, building transparent systems, and designing workflows where both humans and agents collaborate effectively.

Teams that embrace this cultural design will not merely adapt to AI—they will define how engineering is practiced for the next decade.

Typo is the intelligence layer guiding this evolution: measuring readiness, adoption, friction, trust, flow, and stability as engineering undergoes its biggest cultural shift since Agile.

It means AI is not a tool—it is a foundational part of design, planning, development, review, and operations.

Typo measures readiness through sentiment, adoption signals, friction mapping, and workflow impact.

Not if culture encourages reasoning and validation. Skill atrophy occurs only in shallow or unsafe AI adoption.

No—but every engineer needs AI literacy: knowing how models behave, fail, and must be reviewed.

Typo detects review noise, AI-inflated diffs, and reviewer saturation, helping leaders redesign processes.

Blind trust. The second is siloed expertise. Culture must encourage questioning and shared literacy.

More than two decades ago, Agile was born out of a need to break free from rigid, waterfall-style development. It promised faster delivery, happier teams, and better products. However, for many organizations, Agile looks more like a checklist than a mindset.

With AI, remote teams, and DevOps integrations becoming the norm, the classic Agile playbook needs a modern update.

Agile methodologies have evolved continuously. Since its inception, Agile has changed considerably and been widely adopted, because it breaks down the rigidity of traditional approaches and helps teams deal with complexity and rapid change.

However, many organizations still face significant challenges in their Agile journey. With the rise of distributed teams, some find the shift difficult because Agile was primarily built for in-person teams. Organizational culture and regulatory requirements can also conflict with Agile values, which makes some tech leaders hesitant.

Agile is often equated with simply moving fast or doing more in less time, but this is a misreading. Acting on this superficial view leads teams to adopt the terminology without a genuine change in mindset, which results in poor outcomes and disengaged teams. Agile isn’t just a set of processes or checklists; teams must understand the intent behind each practice.

Resistance comes from fear of the unknown, loss of control, and negative past experiences. However, Agile isn’t only a process change. It is also a cultural shift in how organizations think and operate. When organizations resist change, Agile practices are only partially adopted, resulting in poor engagement and lower product quality. To overcome this, organizations must communicate openly, provide consistent training, and cultivate trust and psychological safety.

A key mistake organizations make is equating Agile with abandoning planning and structure. Agile encourages flexibility, but it also values clear goals and measurable milestones to guide progress. Without these, teams lose direction and miss deadlines, and work becomes chaotic.

Rigidly following Scrum ceremonies, obsessing over metrics, or prioritizing tools over the people using them can backfire. When every task becomes a checkbox, it stifles creativity and collaboration. True Agile adoption means valuing individuals, team well-being, and cross-functional collaboration over strict adherence to tools and processes.

Hybrid Agile frameworks combine Agile with complementary methods to offer a flexible and structured approach. Two of them are Scrumban and SAFe.

Scrumban blends Scrum’s structured sprints with Kanban's visual workflow to manage unpredictable workloads and address stakeholder needs. The framework is highly flexible, allowing teams to adjust their processes and workflows based on real-time feedback and changing priorities.

SAFe (the Scaled Agile Framework) is suited to large organizations that need to coordinate multiple teams. Teams are organized into Agile Release Trains (ARTs), which are long-lived teams of Agile teams that plan, commit, and execute together. It supports regular retrospectives and inspect-and-adapt cycles to improve processes and respond to change.

Integrating Agile with DevOps practices enables frequent, reliable releases. While Agile provides the framework for iterative, customer-focused development, DevOps supplies the automation and operational discipline needed to deliver those iterations quickly. This helps deliver high-quality software to end users and supports rapid response to customer feedback. It also breaks down silos between development and operations, fostering better teamwork and freeing both groups to focus on innovation and value-adding activities.

Traditional Agile practices were designed for software development, where work is relatively predictable and requirements are comparatively clear. AI/ML projects, by contrast, are highly iterative and experimental. Fostering a culture where decisions are based on data and experimental results, and automating model validation, data quality checks, and performance monitoring, helps AI/ML and data teams work more efficiently. Customizing Agile practices to support experimentation and flexibility allows these teams to deliver innovative solutions.

In modern Agile, teams must shift from output metrics to outcome metrics. While output metrics focus on hitting velocity or story point targets, outcome metrics track the real value delivered, based on user behaviour, business impact, or customer success. These metrics bridge the gap between product strategy and Agile delivery. They foster innovation as Agile teams explore diverse solutions to achieve goals, and they encourage critical thinking about priorities and data-informed decision-making.

Slack is an instant messaging tool that enables software development teams to organize their conversations into specific topics or team channels. This allows for more effective communication and supports both synchronous and asynchronous conversations. Slack can be integrated with over 2600 popular collaboration and productivity tools.

JIRA is a leading collaboration tool for software development teams that supports Scrum and Kanban boards. It allows them to plan, track, and manage their projects efficiently. It provides issue tracking, sprint planning, and custom workflows to suit your development process.

ClickUp is an all-in-one platform that provides task tracking, documentation, agile boards, and visual planning of projects and tasks. It helps structure work into a hierarchy of spaces, folders, lists, and tasks. ClickUp can also be integrated with third-party applications, including Slack, Google Calendar, and HubSpot.

Zoho Projects is a popular project management tool that allows developers to create, assign, and track tasks and the time spent on them. It also provides insights into project effort and resource allocation. It can be integrated with Zoho’s other services, such as Zoho Books and Zoho’s Finance Suite, as well as third-party apps like MS Office, Zapier, and Google Drive.

GitHub Actions is an automation platform that enables teams to automate software development workflows directly within GitHub repositories. It is primarily used for CI/CD workflows that allow developers to automatically build, test, and deploy code. It also helps create custom workflows using YAML files to automate a wide range of tasks.

CircleCI is a leading cloud-native CI/CD platform that allows developers to rapidly build, test, and deploy applications at scale. It offers built-in security and compliance tools. CircleCI can be integrated with third-party applications like GitHub, GitLab, Slack, Docker, and Terraform.

Selenium is a popular test automation tool for web browsers. It supports multiple programming languages such as Python, JavaScript (Node.js), Ruby, and C#. It provides end-to-end test automation and can be integrated with frameworks such as Cucumber to implement behaviour-driven development (BDD).

Katalon is a test automation tool that supports no-code, low-code, and code-based testing. It generates test reports and tracks test execution results with built-in reporting capabilities. It also provides an end-to-end testing solution for mobile and web applications. Katalon can be integrated with popular CI/CD tools like Jenkins, Azure DevOps, and GitHub Actions.

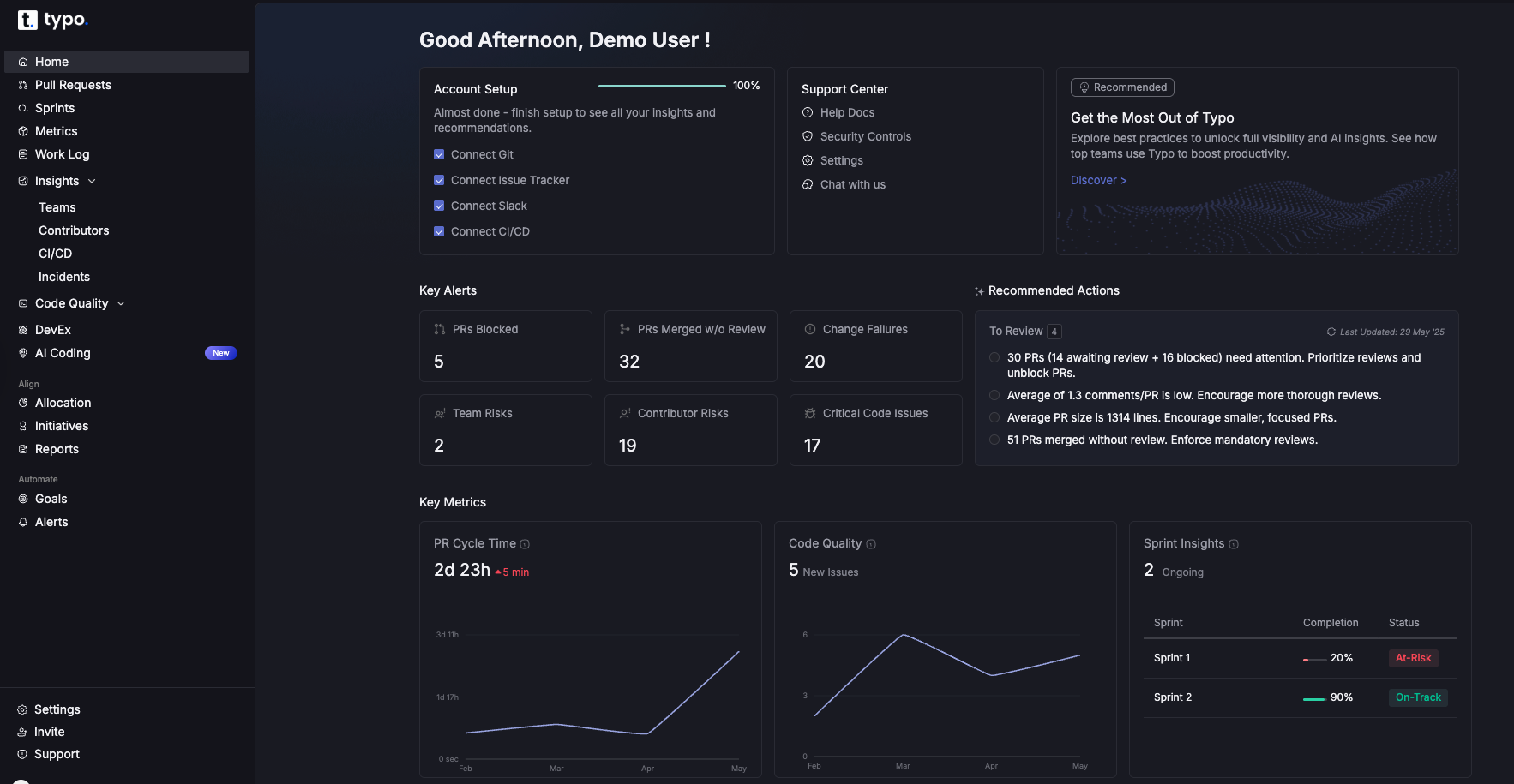

Typo is a well-known engineering analytics platform that helps software teams gain visibility into the SDLC, identify bottlenecks, and automate workflows. It connects engineering data with business goals and uses AI to provide insights into developer workload and identify areas for improvement. Typo can be integrated with various applications such as GitHub, GitLab, JIRA, Jenkins, and Slack.

Agile, at its heart, is all about learning, adapting, and delivering value. Modern software development doesn’t need a new methodology. It needs a more honest, adaptable version of what we already have. That means adapting the framework to the real world: remote teams, fast-changing requirements, and evolving technologies.

After all, real agile happens when teams shift from checking boxes to creating value.

The software engineering industry is diverse and spans a variety of job titles that can vary from company to company. Moreover, this industry is continuously evolving, which makes it difficult to clearly understand what each title actually means and how to advance in these positions.

Below is a breakdown of common engineering job titles, their responsibilities, and ways to climb the career ladder.

Software engineering represents a comprehensive and dynamic discipline that leverages engineering methodologies to architect, develop, and maintain sophisticated software systems. At its foundation, software engineering encompasses far more than code generation—it integrates the complete software development lifecycle, spanning initial system architecture and design through rigorous testing protocols, strategic deployment, and continuous maintenance optimization. Software engineers serve as the cornerstone of this ecosystem, utilizing their technical expertise to analyze complex challenges and deliver scalable, high-performance solutions that drive technological advancement.

Within this evolving landscape, diverse software engineer classifications emerge, each reflecting distinct experience trajectories and operational responsibilities. Junior software engineers typically focus on mastering foundational competencies while supporting cross-functional development teams, whereas senior software engineers and principal engineers tackle sophisticated architectural challenges and mentor emerging talent. Positions such as software engineer II represent intermediate-level roles where professionals are expected to contribute autonomously and resolve increasingly complex technical problems. As market demand for skilled software engineers continues to accelerate, understanding these software engineering classifications and their strategic contributions proves essential for professionals seeking to optimize their career trajectory or organizations aiming to build robust engineering teams.

The Chief Technology Officer (CTO) is the highest attainable post in software engineering. The CTO is a key member of the executive team, responsible for shaping the company's technology strategy and working closely with other executives to ensure alignment with business goals. The role is multifaceted and requires a diverse skill set, and a CTO's decisions can make or break the company. While specific responsibilities depend on the company’s size and makeup, a few common ones are listed below:

In startups or early-stage companies, the Chief Technology Officer may also serve as a technical co-founder, deeply involved in selecting technology stacks, designing system integrations, and collaborating with other executive leaders to set the company’s technical direction.

When facing challenges, the CTO must work closely with stakeholders, board members, and the executive team to align technology initiatives with overall business goals.

The Vice President of Engineering (VP of Engineering) is a high-level executive who reports directly to the CTO. The VP of Engineering is responsible for overseeing the entire engineering department, shaping technical strategy, and managing large, cross-functional teams within the organizational hierarchy. They also actively monitor team progress to ensure continuous improvement in performance, workflow, and collaboration. They typically have at least 10 years of leadership experience. They bridge the gap between technical execution and strategic leadership and ensure product development aligns with business goals.

Not every company has a Director of Engineering. Where the role is absent, the VP or CTO usually covers its responsibilities. The role requires a combination of technical depth, leadership, communication, and operational excellence. Directors of Engineering translate strategic goals into day-to-day operations and delivery.

Software Engineering Managers are mid-level leaders who combine people management with technical know-how. They are responsible for leading teams, making key decisions, and overseeing software development projects. They have a broad understanding of all aspects of designing, innovating, and developing software products and solutions.

Principal Software Engineers are responsible for strategic technical decisions at the company level. They may not always manage people directly, but they lead by influence. Principal software engineers may also serve as chief architects, responsible for designing large-scale computing systems and selecting technology stacks to ensure the technology infrastructure aligns with organizational strategy. They drive the technical vision, strategy, and execution of complex engineering projects within an organization.

Staff Software Engineers, often referred to more generally as staff engineers, tackle open-ended problems, find solutions, and support team and organizational goals. They are recognized for their extensive, advanced technical skills and ability to solve complex problems.

Staff engineers may progress to senior staff engineer roles, taking on even greater leadership and strategic responsibilities within the organization. Both staff engineers and senior staff engineers are often responsible for leading large projects, mentoring engineering teams, and contributing to long-term technology strategy. These roles play a key part in risk assessment and cross-functional communication, ensuring that critical projects are delivered successfully and align with organizational objectives.

A Senior Software Engineer, often referred to as a senior engineer, assists software engineers with daily tasks and troubleshooting. Senior engineers typically progress from a mid-level engineer role and may take on leadership positions such as team lead or tech lead as part of their career path. They have a strong grasp of both foundational concepts and practical implementation.

Leadership skills are essential for senior engineers, especially when mentoring junior team members or managing projects. Senior engineers, team leads, and tech leads are also responsible for debugging code and ensuring technical standards are maintained within the team. The career path for engineers often runs from mid-level engineer to senior engineer, and then to leadership positions such as team lead, tech lead, or engineering manager. In project management, team leads and tech leads play a key role in guiding teams and implementing new technologies.

A Software Engineer, also known as a software development engineer, writes and tests code. Entry-level roles such as junior software engineer and junior engineer focus on foundational skills, including writing tests to ensure software quality. People in these roles are early in their careers and focus mainly on learning, supporting, and contributing to the software development process under the guidance of senior engineers. Software Engineer III is a more advanced title, representing a higher level of responsibility and expertise within the software engineering career path.

Beyond the fundamental development positions, software engineering comprises an extensive spectrum of specialized roles that address distinct technical requirements and operational challenges within modern organizations. Software architects, for instance, are tasked with designing comprehensive structural frameworks and system blueprints for complex software ecosystems, ensuring optimal scalability, maintainability, and strategic alignment with overarching business objectives. Their deep expertise in architectural patterns and system design principles proves instrumental in facilitating technical guidance across development teams while establishing robust coding standards and best practices.

As technology continues to reshape the industry, new specialized roles have emerged to address evolving market demands. Machine learning engineers concentrate on building systems that learn from large datasets, playing a pivotal role in developing AI-driven applications and predictive analytics platforms. Site reliability engineers (SREs) ensure that software systems remain robust, scalable, and highly available, bridging software engineering with IT operations. DevOps engineers streamline the development lifecycle and deployment pipeline, fostering collaboration between development and operations teams to accelerate delivery while improving system reliability and performance.

These specialized roles are essential for organizations that want to stay competitive and drive innovation in their markets. By understanding the responsibilities and technical skills each position requires, companies can assemble well-rounded software engineering teams that can address diverse technical challenges and deliver scalable solutions across complex development environments.

The landscape of software engineering is continuously transformed by AI-driven technologies and changing industry requirements. In recent years, cloud-native architectures, artificial intelligence frameworks, and machine learning algorithms have fundamentally reshaped how software engineers approach complex problems and streamline development workflows. The growing emphasis on cybersecurity and data privacy compliance has introduced both new challenges and new opportunities for software engineering professionals.

Industry also shapes the responsibilities and performance expectations of software engineers. For instance, technology-focused organizations typically prioritize rapid innovation cycles, deployment velocity, and adoption of cutting-edge technology stacks, while traditional enterprises often emphasize integrating software into established business processes. These differences influence project scope, from the types of development initiatives engineers work on to the specific architectures and deployment methods they use.

Maintaining comprehensive awareness of industry trend patterns and understanding how various sectors approach software engineering optimization proves crucial for professionals seeking to advance their technical career trajectories. This strategic knowledge also enables organizations to adapt their development methodologies, attract top-tier technical talent, and construct resilient, future-ready engineering teams capable of delivering scalable, high-performance solutions that align with evolving market demands and technological advancement cycles.

Software engineers receive some of the most competitive compensation in today's job market, reflecting strong demand for their skills and domain expertise. Pay varies with location, industry, experience, and role. For instance, entry-level software engineers typically start with solid base salaries, while senior software engineers, principal architects, and specialists in niche technical areas can command substantially more, frequently surpassing $200,000 annually in leading technology hubs.

Beyond base salary, many organizations offer comprehensive benefits to attract and retain top software engineering talent. These packages may include equity, performance-based bonuses, flexible work arrangements, and health insurance. Some companies also provide professional development programs, wellness initiatives, and generous paid time off, which improve retention and employee satisfaction.

Understanding the compensation and benefits associated with different software engineering roles helps professionals make informed career decisions and helps organizations stay competitive when hiring skilled engineers. It also supports better resource planning and a sustainable hiring pipeline in a rapidly changing field.

How do organizational structure and culture affect the hiring and retention of software engineers? A strong company culture and clearly defined organizational values are critical to attracting and retaining high-caliber software engineers. Organizations that foster innovation, collaboration, and continuous learning tend to be more successful at building high-performing software engineering teams. When software engineers feel supported, recognized, and empowered to contribute ideas, they are more engaged and more motivated to deliver measurable results.

What role do diversity, equity, and inclusion play in modern software engineering organizations? Diversity, equity, and inclusion (DEI) initiatives have become fundamental in software engineering, not merely as compliance requirements but as strategic advantages. Companies that prioritize and systematically implement these values attract broader candidate pools and benefit from diverse perspectives that improve creativity and problem-solving. Transparent communication, recognition of achievements, and structured professional growth paths further improve employee satisfaction and retention.

How can organizations use culture to create the best environment for software engineers? By understanding and deliberately shaping company culture and values, organizations can create environments where software engineers do their best work, resulting in faster innovation, higher productivity, and long-term success. A healthy culture aligns individual professional development with organizational objectives, laying the foundation for continuous improvement and scalable growth.

Constant learning is the key. In the AI era, one needs to upskill continuously. Prioritize both technical aspects and AI-driven areas, including machine learning, natural language processing, and AI tools like GitHub Copilot. You can also pursue certification, attend a workshop, or enroll in an online course. This will enhance your development process and broaden your expertise.

Constructive feedback is the most powerful tool in software engineering. Receiving feedback from peers and managers helps to identify strengths and areas for growth. You can also leverage AI-powered tools to analyze coding habits and performance objectively. This provides a clear path for continuous improvement and development.

Technology evolves quickly, especially with the rise of Generative AI. Read industry blogs, participate in webinars, and attend conferences to stay up to date with established practices and the latest trends in AI and ML. This helps you make informed decisions about which skills to prioritize and which tools to adopt.

Leadership isn't only about managing people. It is also about understanding new methods and tools that enhance productivity. Collaborate with cross-functional teams, and use AI tools for better communication and workflow management. Take initiative in projects, and mentor and guide others towards innovative solutions.

Understanding the career ladder involves mastering different layers and taking on more responsibilities. You should be aware of both traditional roles and emerging opportunities in AI and ML. Moreover, soft skills, including communication, mentorship, and decision making, are as critical as the above-mentioned skills. This will help to prepare you to climb the ladder with purpose and clarity.

With the constantly evolving software engineering landscape, it is crucial to understand the responsibilities of each role clearly. By upskilling continuously and staying updated with the current trends, you can advance confidently in your career. The journey might be challenging, but with the right strategy and mindset, you can do it. All the best!

Starting a startup is like setting off on an adventure without a full map. You can’t plan every detail. Instead, you need to move fast, learn quickly, and adapt on the go. Traditional Software Development Life Cycle (SDLC) methods, like Waterfall, are too rigid for this kind of journey.

That’s why many startups turn to Lean Development: a faster, more flexible approach grounded in lean philosophy, which emphasizes waste reduction and continuous improvement. Lean Software Development translates the principles and practices of lean manufacturing, which were pioneered to optimize production and assembly lines, to the software development domain.

Key benefits of Lean Development for startups include:

Lean Software Development is considered an integral part of the Agile software development methodology, and the Lean-Agile approach combines principles from both to optimize workflows and foster team responsibility.

Lean and Agile share the values set out in the Agile Manifesto: iterative progress, collaboration, and responsiveness to change. Lean Software Development was popularized by Mary and Tom Poppendieck in their 2003 book, "Lean Software Development: An Agile Toolkit."

The Lean approach is often associated with the Minimum Viable Product (MVP) strategy, enabling rapid deployment and iterative feedback to refine products efficiently.

In this blog, we’ll explore what Lean Development is, how it compares to other methods, and the key practices startups use to build smarter and grow faster.

The lean SDLC model focuses on reducing waste and maximizing value to create high-quality software. Lean focuses on improving processes and efficiency, while Agile focuses on enhancing products. Adopting lean development practices within the SDLC helps minimize risks, reduce costs, and accelerate time to market. Lean Software Development encourages a culture of continuous improvement and learning within teams through short iteration cycles, feedback sessions, and ongoing communication. It also promotes team empowerment and collaboration, encouraging teams to break problems down into their constituent elements to optimize workflow and foster team unity. Implementing lean concepts involves assessing current processes, training teams in lean principles, and piloting projects to test and scale successful strategies. In practice, Lean Software Development is implemented through agile frameworks such as Scrum and Kanban, and the agile community acts as a supportive network for adopting and adapting Lean and Agile practices. Both Lean and Agile emphasize continuous improvement and accountability within product development teams.

Lean development is especially effective for startups because it enables them to bring their product to market quickly, even with limited resources. This model emphasizes adaptability, customer feedback, and iterative processes.

Benefits of Lean Development:

The foundational principles of lean software development trace their origins to manufacturing, specifically the Toyota Production System (TPS), which Toyota developed in the decades following World War II. TPS transformed manufacturing by systematically eliminating waste and optimizing the value stream around what customers actually need. It gained widespread recognition for its ability to streamline production, use resources efficiently, and deliver high-quality output with minimal waste.

In 1990, the book "The Machine That Changed the World" brought lean manufacturing principles to global attention and showed how they could be adapted to industries beyond automotive production. As software development matured, practitioners began to recognize the potential of applying lean principles to the software development life cycle (SDLC). The early 2000s marked a turning point with the publication of "Lean Software Development: An Agile Toolkit" by Mary and Tom Poppendieck, which adapted core lean manufacturing principles to software engineering practice. The book introduced the seven lean principles that still guide lean software development today. Lean development approaches are now used by both startups and enterprise organizations to eliminate process inefficiencies, deliver more value to customers, and improve continuously throughout their development workflows.

In traditional models like Waterfall, the requirements are locked in at the beginning. The Agile methodology, by contrast, is guided by the Agile Manifesto, which outlines core principles such as customer collaboration, iterative progress, and responsiveness to change. Agile implements these principles through frameworks like Scrum and Kanban, emphasizing iterative development through sprints and regular feedback. Lean software development is considered an integral part of Agile, and the Lean-Agile approach combines principles from both to optimize workflow and foster team responsibility. While the two share similarities, Lean places greater emphasis on minimizing waste and improving processes, whereas Agile focuses on enhancing products. Both emphasize continuous improvement and accountability within product development teams, and both use iterative development to enable rapid updates and responsiveness to feedback.

The first principle of Lean methodology is waste elimination, which involves identifying and eliminating non-value-adding activities such as inefficient processes, excessive documentation, or redundant meetings. Project managers hold regular meetings to identify and eliminate waste such as unnecessary code and process delays. The methodology prioritizes tasks that directly add value to the product or the customer experience. This allows the development team to optimize their efforts, deliver value to customers effectively, and avoid multitasking, which can dilute focus.

Lean development focuses on creating value, reducing waste, and building quality in throughout the software development process. Build quality is a key focus of Lean Development: software should be reliable, adaptable, and maintainable. Building quality in means preventing waste while maintaining high standards, often through test-driven development and regular feedback. Software with bugs and errors drives customers away and becomes harder to improve over time. The second principle therefore states that software issues must be solved immediately, not after the product has launched. Practices such as pair programming and test-driven development help increase product quality and maintain a continuous feedback loop.
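To make the test-driven development practice mentioned above more concrete, here is a minimal sketch in Python. The `slugify` function and its tests are hypothetical names chosen only for illustration. In a test-first workflow, the two test functions would be written before the implementation and would fail until `slugify` behaves as specified.

```python
# Hypothetical example: `slugify` and its tests are illustrative names,
# not part of any tool or library discussed in this article.

def slugify(title: str) -> str:
    """Turn a title into a lowercase, hyphen-separated slug."""
    # str.split() with no arguments drops leading, trailing,
    # and repeated whitespace, so no extra cleanup is needed.
    return "-".join(title.lower().split())


# In TDD these tests come first and fail until slugify is implemented.
def test_lowercases_and_joins_words():
    assert slugify("Lean Software Development") == "lean-software-development"


def test_collapses_extra_whitespace():
    assert slugify("  Deliver   Fast ") == "deliver-fast"


if __name__ == "__main__":
    test_lowercases_and_joins_words()
    test_collapses_extra_whitespace()
    print("all tests passed")
```

The tests are plain functions with `assert` statements, so they can be run directly or collected by a test runner such as pytest. Keeping each test small makes a failure point to one specific behavior, which is what lets issues get fixed as soon as they appear.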

The market environment is constantly changing, and customers' expectations are growing. This principle prioritizes learning as much as possible before committing to serious, irreversible decisions. It helps avoid teams getting trapped by decisions made early in the development process, encouraging them to commit only at the last responsible moment. Prepare a decision-making model that outlines the necessary steps and gather relevant data to enable fast product delivery and continuous learning.

One of the key principles of the lean SDLC model is to deliver fast. This means building a simple solution, bringing it to market quickly, and enhancing it incrementally based on customer feedback. In Lean Software Development, early releases often take the form of a minimum viable product (MVP): a product with limited functionality that is developed rapidly and launched to gauge user reaction and guide future improvements. Speed to market is a competitive advantage in the software industry because it allows teams to test assumptions early. It also makes it easier to adjust the product to current customer needs in subsequent iterations, saving money and making the development process more result-oriented.

This principle states that people are the most valuable asset in an organization. The product development team is responsible for delivery and continuous improvement, making their empowerment crucial to project success. Agile teams, which operate within Lean and Agile frameworks, drive continuous improvement and accountability through practices like sprints and retrospectives. Empowered teams with high autonomy report better engagement, job satisfaction, and ownership over outcomes. Respect for people means empowering team members and fostering a collaborative environment, especially under tight deadlines, and respecting each other despite differences. Lean development also looks for gaps in the work process that might lead to challenges and conflicts. Ways to minimize these gaps include encouraging open communication, valuing diverse perspectives, and creating a productive, innovative environment by respecting and nurturing talent.

The learning process in the Lean SDLC model is continuous, enabling teams to improve through short iteration cycles, feedback sessions, and ongoing communication with customers. Learning usually takes place in one of three areas: new technologies, new skills, or a better understanding of users’ wants and needs. This lean principle focuses on amplifying learning by creating and retaining knowledge. Practices like code reviews, pair programming, and cross-team meetings ensure that knowledge is shared among team members. Retaining that knowledge requires the infrastructure to properly document and preserve valuable insights. Methods for creating and retaining knowledge include user story development, pair programming, knowledge-sharing sessions, and thoroughly commented code.

This principle, Optimize the Whole, emphasizes optimizing the entire process and value stream rather than individual steps. It treats software delivery as an interconnected system, where improving one part in isolation can create bottlenecks elsewhere, so improvements should benefit the overall flow and efficiency. Techniques to optimize the whole include value stream mapping, enhancing cross-functional collaboration, reducing handoff delays, and ensuring smooth integration between teams.
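As a rough illustration of value stream mapping, the sketch below models a delivery pipeline as a list of steps and computes flow efficiency, meaning the share of total lead time spent on active work rather than waiting. The `Step` class, the step names, and all of the hour figures are made-up assumptions for the example, not measurements from a real team.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One stage in a hypothetical value stream."""
    name: str
    work_hours: float  # time spent actively working on the item
    wait_hours: float  # time the item sat idle before this stage


def flow_efficiency(steps):
    """Return active work time divided by total lead time (0.0 if empty)."""
    work = sum(s.work_hours for s in steps)
    total = work + sum(s.wait_hours for s in steps)
    return work / total if total else 0.0


def biggest_delay(steps):
    """Return the step with the longest wait, a likely handoff bottleneck."""
    return max(steps, key=lambda s: s.wait_hours)


# Illustrative numbers only.
stream = [
    Step("design", work_hours=8, wait_hours=16),
    Step("development", work_hours=24, wait_hours=8),
    Step("code review", work_hours=2, wait_hours=30),
    Step("deployment", work_hours=1, wait_hours=11),
]

if __name__ == "__main__":
    print(f"flow efficiency: {flow_efficiency(stream):.0%}")
    print(f"longest wait before: {biggest_delay(stream).name}")
```

With these sample figures, only 35 of the 100 total hours are active work, and the longest wait sits in front of code review. Speeding up development alone would barely change the lead time, which is why the principle asks teams to look at the whole stream before optimizing any single step.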

In lean software development, customer feedback is more than a quality assurance checkpoint: it is the primary input for architectural decisions, feature prioritization, and the pace of development. The lean approach calls for a thorough understanding of stakeholder requirements and for integrating user input throughout the entire Software Development Life Cycle (SDLC). Continuous integration and deployment pipelines support this by establishing feedback loops that let teams validate hypotheses quickly, adjust features, and stay aligned with market demand.