Scrum is a popular framework for software development. It emphasizes continuous improvement, transparency, and adaptability to changing requirements. Scrum teams hold regular ceremonies, including Sprint Planning, Daily Stand-ups, Sprint Reviews, and Sprint Retrospectives, to keep the process on track and address any issues.

Agile Maturity Metrics are often adopted to assess how thoroughly a team understands and implements Agile concepts. However, there are several dimensions to consider when evaluating their effectiveness.

These metrics typically attempt to quantify a team's grasp and application of Agile principles, often focusing on practices such as Test-Driven Development (TDD), vertical slicing, and definitions of "Done" and "Ready." Ideally, they should provide a quarterly snapshot of the team's Agile health.

The primary goal of Agile Maturity Metrics is to encourage self-assessment and continuous improvement. They aim to identify areas of strength and opportunities for growth in Agile practices. By evaluating different Agile methodologies, teams can tailor their approaches to maximize efficiency and collaboration.

Instead of relying solely on maturity metrics:

While Agile Maturity Metrics have their place in assessing a team’s Agile journey, they should be used in conjunction with other evaluative tools to overcome inherent limitations. Emphasizing adaptability, transparency, and honest self-reflection will yield a more accurate reflection of Agile competency and drive meaningful improvements.

Story Point Velocity is often used by Scrum teams to measure progress, but it's essential to be aware of its intrinsic limitations when considering it as a performance metric.

One major drawback is inconsistency across teams. Story Points lack a standardized value, meaning one team's interpretation can significantly differ from another's. This variability makes it nearly impossible to compare teams or aggregate their performance with any accuracy.

Story Points are most effective within a specific team over a brief period. They assist in gauging how much work might be accomplished in a single Sprint, but their reliability diminishes over more extended periods as teams may adjust their estimation models.
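As a rough illustration of that short-horizon use, the sketch below averages the points one team completed over its most recent sprints to suggest a planning figure for the next one. The function name `forecast_velocity`, the three-sprint window, and the sample history are assumptions made for this example, not a prescribed method.

```python
from statistics import mean


def forecast_velocity(completed_points, window=3):
    """Average the story points completed in the most recent sprints.

    completed_points: points finished per sprint, oldest sprint first.
    window: how many recent sprints to average (an assumed default).
    """
    if not completed_points:
        raise ValueError("need at least one completed sprint")
    recent = completed_points[-window:]
    return mean(recent)


# Hypothetical history for a single team, oldest sprint first.
history = [21, 25, 18, 24, 23]
print(forecast_velocity(history))  # average of the last three sprints
```

Keeping the window short reflects the point above: older sprints may have been estimated under a different model, so they are left out of the average.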

As teams evolve, they may choose to renormalize what a Story Point represents. This adjustment is made to reflect changes in team dynamics, skills, or understanding of the work involved. Consequently, comparing long-term performance becomes unreliable because past and present Story Points may not represent the same effort or value.

The scope of Story Points is inherently limited to within a single team. Using them outside this context for any comparative or evaluative purpose is discouraged. Their subjective nature and variability between teams prevent them from serving as a solid benchmark in broader performance assessments.

While Story Point Velocity can be a useful tool in specific scenarios, its effectiveness as a performance metric is limited by issues of consistency, short-term utility, and context restrictions. Teams should be mindful of these limitations and seek additional metrics to complement their insights and evaluations.

Understanding the distinction between bugs and stories in a Product Backlog is crucial for maintaining a streamlined and effective development process. While both contribute to the overall quality of a product, they serve unique purposes and require different methods of handling.

In summary, maintaining a clear distinction between bugs and stories isn't just beneficial; it's necessary. It allows for an organized approach to product development, ensuring that teams can address critical issues promptly while continuing to innovate and enhance. This balance is key to retaining a competitive edge in the market and ensuring ongoing user satisfaction.

When it comes to assessing Agile maturity, the focus often lands on individual perceptions of Agile concepts like TDD, vertical slicing, and definitions of "done" and "ready." While these elements seem crucial, relying heavily on self-assessment can lead to misleading conclusions. Team members may overestimate their grasp of Agile principles, while others might undervalue their contributions. This discrepancy creates an inaccurate gauge of true Agile maturity, making it a metric that can be easily manipulated and perhaps not entirely reliable.

Story point velocity is a traditional metric frequently used to track team progress from sprint to sprint. However, it fails to provide a holistic view. Teams may spend time on bugs, spikes, or other non-story tasks that aren’t reflected in story points. Furthermore, story points lack a standardized value across teams and over time. A point in one team's context might not equate to a point in another's, which makes inter-team and longitudinal comparisons ineffective. Therefore, while story points can guide workload planning within a single team's sprint, they lose their utility when used outside that narrow scope.
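To make the blind spot concrete, the sketch below computes how a sprint's logged hours split across work types. The record layout (`type`, `hours`, `points`) and the numbers are hypothetical; a real team would pull this from its own tracker.

```python
from collections import defaultdict


def effort_share_by_type(work_items):
    """Return each work type's share of the total logged hours."""
    hours = defaultdict(float)
    for item in work_items:
        hours[item["type"]] += item["hours"]
    total = sum(hours.values())
    if total == 0:
        return {}
    return {kind: spent / total for kind, spent in hours.items()}


# Hypothetical sprint: only the story carries points.
sprint_items = [
    {"type": "story", "hours": 60, "points": 13},
    {"type": "bug", "hours": 25, "points": 0},
    {"type": "spike", "hours": 15, "points": 0},
]

shares = effort_share_by_type(sprint_items)
for kind, share in shares.items():
    print(f"{kind}: {share:.0%}")
```

In this made-up sprint, velocity would report 13 points while a sizeable portion of the team's hours went to work that carries no points at all.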

Evaluating quality by the number and severity of bugs introduces another problem. Assigning criticality to bugs can be subjective, and this can skew the perceived importance and urgency of issues. Different stakeholders may have differing opinions on what constitutes a critical bug, leading to a metric that is open to interpretation and manipulation. This ambiguity detracts from its value as a reliable measure of quality.

In summary, traditional metrics like Agile maturity self-assessments, story point velocity, and bug severity often fall short in effectively measuring Scrum team performance. These metrics tend to be subjective, easily influenced by individual biases, and lack standardization across teams and over time. For a more accurate assessment, it’s crucial to develop metrics that consider the unique dynamics and context of each Scrum team.

With the help of DORA DevOps Metrics, Scrum teams can gain valuable insights into their development and delivery processes.

In this blog post, we discuss how DORA metrics help boost Scrum team performance.

DevOps Research and Assessment (DORA) metrics are a compass for engineering teams striving to optimize their development and operations processes.

In 2015, the DORA (DevOps Research and Assessment) team was founded by Gene Kim, Jez Humble, and Dr. Nicole Forsgren to evaluate and improve software development practices. The aim is to enhance the understanding of how development teams can deliver software faster, more reliably, and with higher quality.

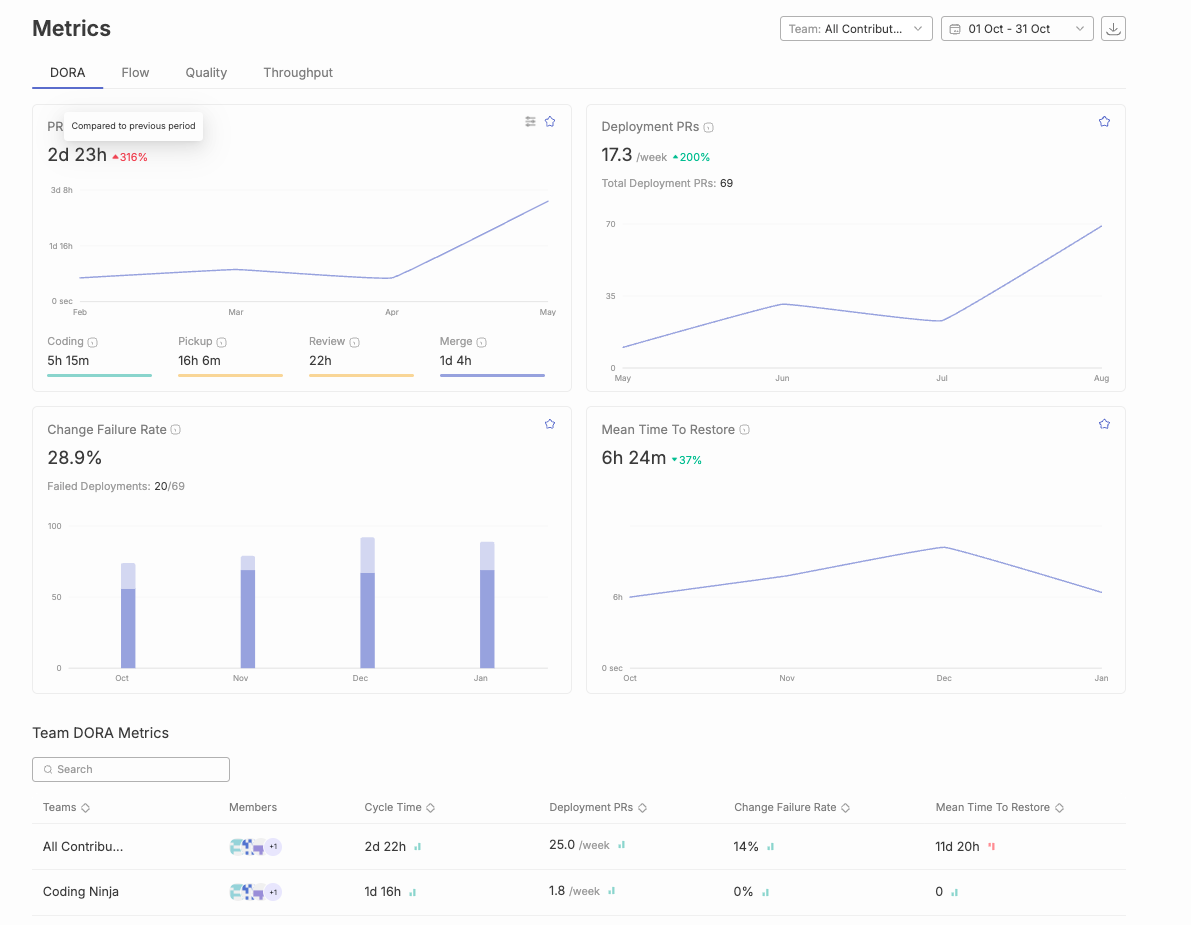

Four key DORA metrics are:

Other DORA metrics can also be used to provide a more comprehensive view of software delivery performance, complementing the four metrics and helping teams balance speed and quality.

Reliability is a fifth metric that the DORA research team added in 2021. It measures how well a service meets its users' expectations, such as availability and performance, and reflects modern operational practices. It has no standard quantifiable targets for performance levels; instead, it depends on service level indicators and service level objectives.
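Because reliability is judged against a team's own objectives, a small sketch can show the mechanics: compute an availability indicator from request counts and compare it with a target. The function names, the request counts, and the 99.9% objective are all assumptions chosen for illustration.

```python
def availability(good_requests, total_requests):
    """Service level indicator: fraction of requests served successfully."""
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")
    return good_requests / total_requests


def meets_slo(indicator, objective):
    """True when the measured indicator reaches the agreed objective."""
    return indicator >= objective


# Hypothetical month of traffic and a hypothetical 99.9% objective.
sli = availability(good_requests=999_500, total_requests=1_000_000)
print(f"availability: {sli:.4%}")
print("meets SLO:", meets_slo(sli, objective=0.999))
```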

Organizations looking to improve their software delivery performance can implement DORA metrics to benchmark, track progress, and identify areas for improvement. DORA metrics encourage collaboration between development and operations teams, which helps form multidisciplinary teams and break down silos between them. By adopting the four DORA metrics, teams can make data-driven decisions to enhance both speed and stability in their DevOps practices.


DORA metrics are useful for Scrum team performance because they provide key insights into the software development and delivery process. They offer objective data for evaluating a team's performance and identifying areas for improvement, which in turn drives operational performance and improves developer experience.

DORA metrics track crucial KPIs such as deployment frequency, lead time for changes, mean time to recovery (MTTR), and change failure rate, with each metric measuring a specific aspect of software delivery performance. Together they help Scrum teams understand their efficiency and identify areas for improvement. For example, a shorter lead time for changes indicates faster delivery of value.
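The two speed-oriented metrics can be sketched from a simple list of change records. The example below assumes each record carries a commit timestamp and a production deployment timestamp. The field names, the seven-day window, and the use of the median are choices made for this illustration rather than a prescribed DORA formula.

```python
from datetime import datetime, timedelta
from statistics import median


def deployment_frequency(deploy_count, period_days):
    """Average number of production deployments per day."""
    if period_days <= 0:
        raise ValueError("period_days must be positive")
    return deploy_count / period_days


def median_lead_time(changes):
    """Median time from code commit to production deployment."""
    seconds = [
        (c["deployed_at"] - c["committed_at"]).total_seconds() for c in changes
    ]
    return timedelta(seconds=median(seconds))


# Hypothetical changes shipped during one week.
changes = [
    {"committed_at": datetime(2024, 3, 4, 9, 0),
     "deployed_at": datetime(2024, 3, 4, 13, 0)},   # 4 hours
    {"committed_at": datetime(2024, 3, 5, 10, 0),
     "deployed_at": datetime(2024, 3, 6, 12, 0)},   # 26 hours
    {"committed_at": datetime(2024, 3, 7, 8, 0),
     "deployed_at": datetime(2024, 3, 7, 18, 0)},   # 10 hours
]

print("deployments per day:", deployment_frequency(len(changes), period_days=7))
print("median lead time:", median_lead_time(changes))
```

The median is used here so that one unusually slow change does not dominate the figure; a team could just as reasonably report a mean or a percentile.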

In addition to DORA metrics, Agile Maturity Metrics can be utilized to gauge how well team members grasp and apply Agile concepts. These metrics can cover a comprehensive range of practices like Test-Driven Development (TDD), Vertical Slicing, and Definitions of Done and Ready. Regular quarterly assessments can help teams reflect on their Agile journey.

Teams can streamline their software delivery process and reduce bottlenecks by monitoring deployment frequency and lead time for changes. Tracking each change from code commit to deployment measures lead time for changes and exposes delays. It also helps teams identify bottlenecks in the development pipeline so they can make targeted improvements at each stage. Optimizing deployment pipelines and processes improves deployment efficiency and productivity, and continuous integration plays a key role in ensuring code quality and deployment success throughout the development cycle. The result is faster delivery of features and bug fixes. Effective code review processes, including automation, are crucial for reducing change failure rates and improving deployment quality. Creating smaller pull requests can increase deployment frequency and reduce lead time for changes by making work more manageable and streamlining the release process.

Another key metric is Story Point Velocity, which provides insight into how a team performs across sprints. This metric can be more telling when combined with an analysis of time spent on non-story tasks such as bugs and spikes.

Tracking the change failure rate and MTTR helps software teams focus on improving the reliability and stability of their applications. These reliability metrics are measured in the production environment, where a failure can have a significant impact on users and business operations. Addressing failures quickly is crucial to minimize downtime and restore normal service as soon as possible. Robust testing processes, especially automated testing, help catch bugs early, speed up the release cycle, and improve deployment reliability. Automated testing reduces the change failure rate and boosts overall software quality, resulting in more stable releases and fewer disruptions for users. When measuring deployment frequency, it is important to count only successful deployments to production, as this reflects the team's ability to deliver value reliably. A consistently high change failure rate undermines the gains from deployment frequency and lead time for changes.
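The two stability metrics can be sketched in the same spirit. The example assumes each deployment record has a boolean flag saying whether it caused a production failure, and each incident has start and resolution timestamps. These field names and the sample data are hypothetical.

```python
from datetime import datetime, timedelta


def change_failure_rate(deployments):
    """Share of production deployments that led to a failure."""
    if not deployments:
        return 0.0
    failed = sum(1 for d in deployments if d["caused_failure"])
    return failed / len(deployments)


def mean_time_to_recovery(incidents):
    """Average time between an incident starting and service being restored."""
    if not incidents:
        raise ValueError("need at least one incident")
    durations = [i["resolved_at"] - i["started_at"] for i in incidents]
    return sum(durations, timedelta()) / len(durations)


# Hypothetical sprint: ten deployments, two of which caused a failure.
deployments = [{"caused_failure": i in (3, 7)} for i in range(10)]
incidents = [
    {"started_at": datetime(2024, 3, 5, 10, 0),
     "resolved_at": datetime(2024, 3, 5, 10, 30)},   # 30 minutes
    {"started_at": datetime(2024, 3, 8, 14, 0),
     "resolved_at": datetime(2024, 3, 8, 15, 30)},   # 90 minutes
]

print(f"change failure rate: {change_failure_rate(deployments):.0%}")
print("MTTR:", mean_time_to_recovery(incidents))
```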

Summing these at sprint’s end gives a clear view of improvement in handling defects.

DORA metrics give clear data that helps teams decide where to improve, making it easier to prioritize the most impactful actions for better performance and enhanced customer satisfaction. DORA metrics create a shared language and common goals across teams, helping to break down silos.

Incorporating customer feedback alongside DORA metrics enables teams to prioritize improvements that matter most to users, ensuring that changes address real customer needs and drive meaningful value.

Regularly reviewing these metrics encourages a culture of continuous improvement. Measuring deployment frequency over time helps teams track their progress and set improvement goals. This helps software development teams to set goals, monitor progress, and adjust their practices based on concrete data.

DORA metrics allow DevOps teams to compare their performance against industry standards or other teams within the organization. By leveraging industry benchmarks, teams can identify gaps and prioritize areas for improvement. Top performing teams typically deploy code multiple times a day and recover from failures quickly, while average teams often take about a week to deploy changes, highlighting the value of striving for higher efficiency and agility. This encourages healthy competition and drives overall improvement.

DORA metrics provide actionable data that helps Scrum teams identify inefficiencies and bottlenecks in their processes. Analyzing value streams and the entire software delivery process enables teams to pinpoint where value flow is obstructed and optimize the end-to-end delivery pipeline. Using flow metrics to measure and optimize the value stream from development to delivery helps assess the efficiency and impact of the entire value stream, leading to improved business outcomes. Analyzing these metrics allows engineering leaders to make informed decisions about where to focus improvement efforts and reduce recovery time. By incorporating both DORA and other Agile metrics, teams can achieve a holistic view of their performance, ensuring continuous growth and adaptation.

Firstly, understand the importance of DORA Metrics as key metrics for measuring software delivery performance, since each metric provides insight into different aspects of the development and delivery process. Together, these metrics offer a comprehensive view of the team’s performance and allow them to make data-driven decisions.

Scrum teams should start by setting baselines for each metric to get a clear starting point and set realistic goals. For instance, if a Scrum team currently deploys once a month, it may be unrealistic to aim for multiple deployments per day right away. Instead, the team could set a more achievable goal, like deploying once a week, and gradually work towards increasing its frequency.
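One hypothetical way to turn a baseline into gradual goals is to step the target up by a fixed factor until the chosen goal is reached. The helper `stepped_targets` and the doubling factor below are assumptions for illustration only; teams should pick increments that fit their own context.

```python
def stepped_targets(baseline, goal, factor=2.0):
    """List intermediate targets from a baseline up to a goal.

    Each step multiplies the previous target by `factor`, capped at `goal`.
    """
    if baseline <= 0 or goal < baseline or factor <= 1:
        raise ValueError("expected 0 < baseline <= goal and factor > 1")
    targets = []
    current = baseline
    while current < goal:
        current = min(current * factor, goal)
        targets.append(current)
    return targets


# Deployments per month: from once a month towards roughly once a week.
print(stepped_targets(baseline=1, goal=4))
```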

Scrum teams should schedule regular reviews (e.g., during sprint retrospectives) to discuss the metrics and identify trends, patterns, and anomalies in the data. This helps them track progress, pinpoint areas for improvement, and make data-driven decisions to optimize their processes and adjust their goals as needed.

Use the insights gained from the metrics to drive ongoing improvements and foster a culture that values experimentation and learning from mistakes. By creating this environment, Scrum teams can steadily enhance their software delivery performance. Note that this approach should go beyond DORA metrics alone. It should also take into account other factors like developer productivity and well-being, collaboration, and customer satisfaction.

Encourage collaboration between development, operations, and other relevant teams to share insights and work together to address bottlenecks and improve processes. Make the metrics and their implications transparent to the entire team. You can use the DORA Metrics dashboard to keep everyone informed and engaged.

When evaluating Scrum teams, traditional metrics like velocity and hours worked can often miss the bigger picture. Instead, teams should concentrate on meaningful outcomes that reflect their real-world impact, such as improving customer retention through frequent and reliable deployments. Here are some alternative metrics to consider:

Focusing on these outcomes shifts the attention from internal team performance metrics to the broader impact the team has on the organization and its customers. This approach not only aligns with agile principles but also fosters a culture centered around continuous improvement and customer value.

In today's fast-paced business environment, effectively measuring the performance of Scrum teams can be quite challenging. This is where the principles of Evidence-Based Management (EBM) come into play. By relying on EBM, organizations can make informed decisions through the use of data and empirical evidence, rather than intuition or anecdotal success stories.

1. Objective Metrics: EBM encourages the use of quantifiable data to assess outcomes. For Scrum teams, this might include metrics like sprint velocity, defect rates, or customer satisfaction scores, providing a clear picture of how the team is performing over time.

2. Continuous Improvement: EBM fosters an environment of continuous learning and adaptation. By regularly reviewing data, Scrum teams can identify areas for improvement, tweak processes, and optimize their workflows to become more efficient and effective.

3. Strategic Decision-Making: EBM allows managers and stakeholders to make strategic decisions that are grounded in reality. By understanding what truly works and what does not, teams are better positioned to allocate resources effectively, set achievable goals, and align their efforts with organizational objectives.

In conclusion, the integration of Evidence-Based Management into the Scrum framework offers a robust method for measuring team performance. It emphasizes objective data, continuous improvement, and strategic alignment, leading to more informed decision-making and enhanced organizational performance.

Transitioning to a new framework like Scrum can breathe life into a team's workflow, providing structure and driving positive change. Yet, as the novelty fades, teams may slip into a mindset where they believe there's nothing left to improve. Here's how to tackle this mentality:

Regular retrospectives are key to ongoing improvement. Instead of focusing solely on what's working, encourage team members to explore areas of stagnation. Use creative retrospective formats like Sailboat Retrospective or Starfish to spark fresh insights. This can reinvigorate discussions and spotlight subtle areas ripe for enhancement.

Instill a culture of continuous improvement by introducing clear, objective metrics. Tools such as cycle time, lead time, and work item age can offer insights into process efficiency. These metrics provide concrete evidence of where improvements can be made, moving discussions beyond gut feeling.
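These three flow metrics can be sketched from the dates on a work item. The example assumes commonly used definitions: lead time runs from creation to completion, cycle time from start of work to completion, and work item age from start of work to today for items still in progress. The field names and dates are hypothetical.

```python
from datetime import date


def lead_time_days(item):
    """Days from the item being created to it being done."""
    return (item["done"] - item["created"]).days


def cycle_time_days(item):
    """Days from work starting on the item to it being done."""
    return (item["done"] - item["started"]).days


def work_item_age_days(item, today):
    """Days an unfinished item has been in progress so far."""
    return (today - item["started"]).days


# Hypothetical items from one team's board.
finished = {
    "created": date(2024, 3, 1),
    "started": date(2024, 3, 4),
    "done": date(2024, 3, 8),
}
in_progress = {"created": date(2024, 3, 5), "started": date(2024, 3, 6)}

print("lead time:", lead_time_days(finished), "days")
print("cycle time:", cycle_time_days(finished), "days")
print("age:", work_item_age_days(in_progress, today=date(2024, 3, 11)), "days")
```

Reviewing the oldest in-progress items in a retrospective is one way to move the discussion from gut feeling to a specific piece of stalled work.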

Encourage team members to pursue new skills and certifications. This boosts individual growth, which in turn enhances team capabilities. Platforms like Coursera or Khan Academy offer courses that can introduce new practices or methodologies, further refining your Scrum process.

Create an environment where feedback is not only welcomed but actively sought after. Continuous feedback loops, both formal and informal, can identify blind spots and drive progress. Peer reviews or rotating leadership roles can keep perspectives fresh.

Sometimes, complacency arises from routine. Rotate responsibilities within the team or introduce new challenges to encourage team members to think creatively. This could involve tackling a new type of project, experimenting with different tools, or working on cross-functional initiatives.

By making these strategic adjustments, Scrum teams can maintain their momentum and uncover new avenues for growth. Remember, the journey of improvement is never truly complete. There's always a new horizon to reach.

Typo is a powerful tool designed specifically for tracking and analyzing DORA metrics. It provides an efficient solution for DevOps and Scrum teams seeking precision in their performance measurement.


Divided Focus: Juggling dual responsibilities often leads to neglected duties. Balancing the detailed work of a developer with the overarching team-care responsibilities of a Scrum Master can scatter attention and dilute effectiveness. Each role demands a full-fledged commitment for optimal performance.

Prioritization Conflicts: The immediate demands of coding tasks can overshadow the broader, less tangible obligations of a Scrum Master. This misalignment often results in prioritizing development work over facilitating team dynamics or resolving issues.

Impediment Overlook: A Scrum Master is pivotal in identifying and eliminating obstacles hindering the team. However, when embroiled in development, there is a risk that the crucial tasks of monitoring team progress and addressing bottlenecks are overlooked.

Diminished Team Support: Effective Scrum Masters nurture team collaboration and efficiency. When their focus is divided, the encouragement and guidance needed to elevate team performance might fall short, impacting overall productivity.

Burnout Risk: Balancing two demanding roles can lead to fatigue and burnout. This is detrimental not only to the individual but also to team morale and continuity of workflow.

Ineffective Communication: Clear, consistent communication is the cornerstone of agile success. A dual-role individual might struggle to maintain ongoing dialogue, hampering transparency and slowing down decision-making processes.

Each of these challenges underscores the importance of having dedicated roles in a team structure. Balancing dual roles requires strategic planning and sharp prioritization to ensure neither responsibility is compromised.

Leveraging DORA Metrics can transform Scrum team performance by providing actionable insights into key aspects of development and delivery. When implemented the right way, teams can optimize their workflows, enhance reliability, and make informed decisions to build high-quality software.